TL;DR

- Vibe coding uses LLMs to generate application code based on natural language prompts—but may introduce code the developer didn’t fully review

- This creates unintended privacy and security risks, especially in front-end and browser-facing code

- Client-side behaviors like data collection, tracking, and third-party script loading can slip through undetected

- CISOs need visibility into generated code and runtime client-side behaviors to stay compliant with PCI DSS, HIPAA, and GDPR

- Feroot detects unauthorized scripts and maps client-side risks back to compliance frameworks

What is vibe coding, and how does it work?

Vibe coding is a new development paradigm where large language models (LLMs) generate code from natural language prompts. The developer’s role shifts from writing every line to guiding the model toward a desired output—iterating through prompts, feedback, and high-level direction.

Examples of vibe coding tools and workflows:

- GitHub Copilot generating React or Vue components from comments

- GPT-4 or Gemini responding to “Build a login form with validation”

- AI auto-filling CSS, data fetching logic, or DOM manipulation based on vague intent

Vibe coding can speed up development dramatically, but that speed comes at a cost: developers may not fully understand, review, or audit all the code they deploy, especially client-side code.

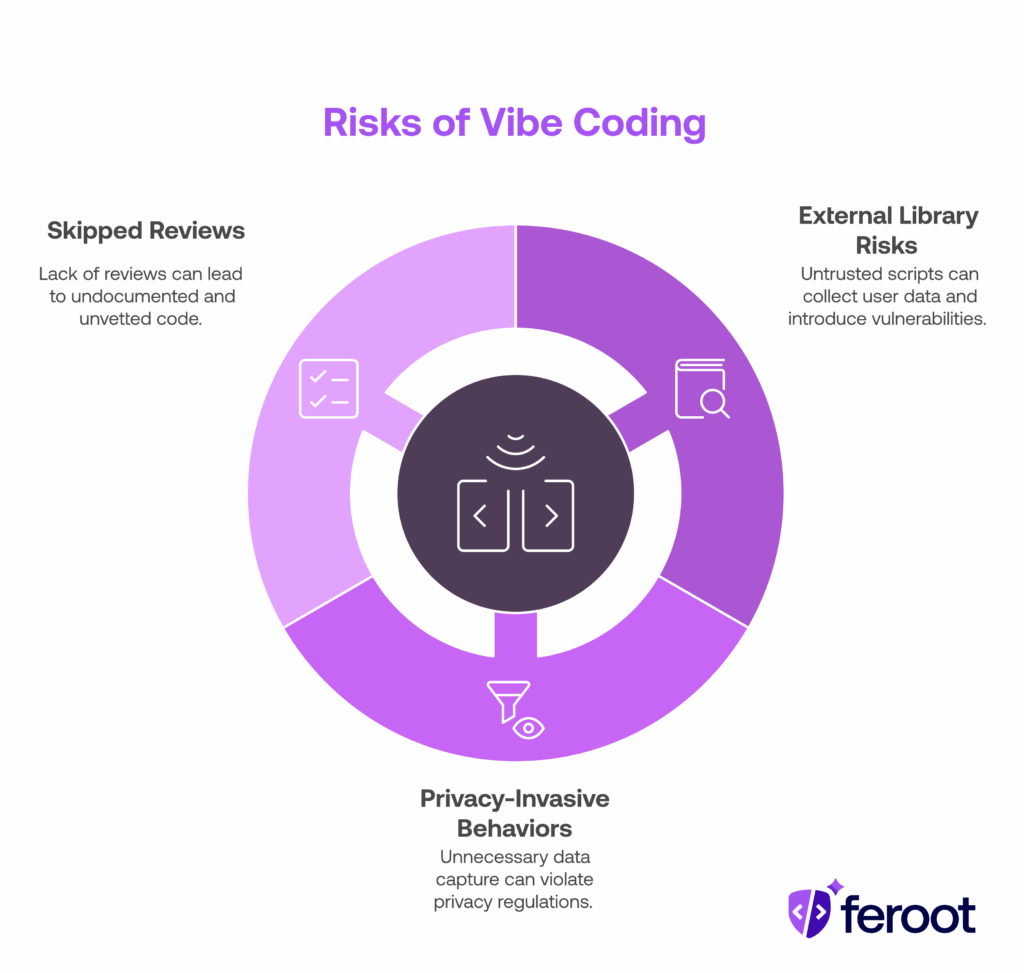

Why does vibe coding create new privacy and compliance risks?

Because vibe coding emphasizes speed and abstraction over line-by-line control, it introduces three major risks for security and compliance teams:

1. Generated code may import external libraries or load third-party scripts

LLMs often suggest or include packages (e.g., analytics SDKs, UI libraries) that load code dynamically in the browser—without security vetting.

Risk: Untrusted scripts embedded into your site can collect user data, trigger pixel tracking, or introduce vulnerabilities.
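One low-cost review step is to list every external script a generated file would load before it is merged. The TypeScript sketch below does this with two regular expressions, one for `<script src>` tags and one for dynamic `import()` of absolute URLs. The function name `findExternalScripts` and the first-party origin are illustrative assumptions. A regex pass is only a coarse first filter: scripts injected at runtime will not show up in it.

```typescript
// Hypothetical review helper: lists external script URLs referenced in a
// generated source string. Regex-based, so it is a coarse first filter,
// not a parser; scripts injected at runtime will not show up here.
const SCRIPT_SRC = /<script\b[^>]*\bsrc\s*=\s*["']([^"']+)["']/gi;
const DYNAMIC_IMPORT = /\bimport\s*\(\s*["'](https?:\/\/[^"']+)["']\s*\)/gi;

function findExternalScripts(source: string, firstPartyOrigin: string): string[] {
  const found = new Set<string>();
  for (const pattern of [SCRIPT_SRC, DYNAMIC_IMPORT]) {
    for (const match of source.matchAll(pattern)) {
      // Resolve relative and protocol-relative paths against the first-party origin.
      const url = new URL(match[1], firstPartyOrigin);
      if (url.origin !== firstPartyOrigin) {
        found.add(url.href);
      }
    }
  }
  return [...found].sort();
}
```

Run against a generated component, this would flag an analytics SDK loaded from a CDN but not a same-origin bundle such as `/js/app.js`. Each flagged URL then becomes a question for the reviewer: is this script authorized, and is it needed?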

2. Code may include privacy-invasive behaviors by default

Generated front-end logic may capture user inputs, mouse events, or telemetry for debugging—even if unnecessary for functionality.

Risk: These behaviors can violate HIPAA, GDPR, or CCPA if PII or PHI is logged or transmitted.

3. Developers may skip traditional reviews

Because vibe coding “feels” like prototyping or exploratory work, it often bypasses secure code review, static analysis, or privacy assessments.

Risk: Shadow code and unvetted logic reach production without full documentation or compliance mapping.

What kinds of client-side issues can LLM-generated code introduce?

Here are common examples of risky behaviors introduced by LLM-generated front-end code:

| Issue | Risk |
| --- | --- |
| Auto-generated form validation logs user inputs to the console or a remote server | Potential PII or PHI exposure under GDPR or HIPAA |
| Third-party scripts loaded without Subresource Integrity (SRI) | Weakens the script integrity controls PCI DSS 6.4.3 requires on payment pages and widens the supply chain attack surface |
| Event listeners that track mouse movement or keystrokes for “UX improvement” | May violate GDPR if the data is collected without consent |
| Analytics SDKs included without opt-in consent | Invites regulatory scrutiny under GDPR, CCPA, or HIPAA |
| Leftover console.log() calls or debug tools that capture sensitive fields | Exposes data to internal users or threat actors, and surfaces as a finding during audits |

These issues are hard to detect post-deployment—especially if generated code is committed without documentation or peer review.
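For the SRI row in the table, the integrity value is the algorithm name followed by a base64-encoded SHA-256, SHA-384, or SHA-512 digest of the exact file contents. A minimal Node.js/TypeScript sketch, assuming the third-party script has been pinned to a specific version and its body is available at build time (`sriHash` and `scriptTagWithSri` are illustrative names):

```typescript
import { createHash } from "node:crypto";

type SriAlgorithm = "sha256" | "sha384" | "sha512";

// Computes an SRI value ("<alg>-<base64 digest>") for the exact bytes of a script.
function sriHash(scriptBody: string | Uint8Array, algorithm: SriAlgorithm = "sha384"): string {
  const digest = createHash(algorithm).update(scriptBody).digest("base64");
  return `${algorithm}-${digest}`;
}

// Renders a script tag carrying the integrity value. Cross-origin scripts also
// need crossorigin="anonymous" (and CORS headers from the CDN) for the check to pass.
function scriptTagWithSri(src: string, scriptBody: string): string {
  return `<script src="${src}" integrity="${sriHash(scriptBody)}" crossorigin="anonymous"></script>`;
}
```

If the CDN later serves different bytes, the browser refuses to run the script. For the same reason, SRI only works with pinned, versioned URLs, not "latest" tags that change under you.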

What compliance frameworks are most impacted by LLM-generated web code?

Client-side code that’s generated via LLMs can introduce silent violations across multiple compliance regimes:

PCI DSS 4.0

- Requirement 6.4.3: Requires that every script loaded and executed on payment pages is authorized, has its integrity assured, and is recorded in an inventory with a written justification

- Requirement 11.6.1: Requires a change- and tamper-detection mechanism that alerts on unauthorized modifications to payment pages as received by the browser. LLM-suggested code may bypass change controls entirely
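One small piece of the change detection that 11.6.1 calls for is comparing an approved script inventory against what is actually observed in the browser. The sketch below assumes a hypothetical inventory that maps each script URL to a content hash, such as an SRI value. The function names are illustrative, and collecting the observed inventory is out of scope. A diff like this is not, on its own, a complete 11.6.1 mechanism.

```typescript
// Hypothetical inventory shape: script URL -> content hash (e.g. an SRI value).
type ScriptInventory = Map<string, string>;

interface InventoryDiff {
  added: string[];    // observed but never authorized
  modified: string[]; // authorized URL, but the content hash changed
  removed: string[];  // authorized but no longer observed
}

function diffInventory(approved: ScriptInventory, observed: ScriptInventory): InventoryDiff {
  const added: string[] = [];
  const modified: string[] = [];
  for (const [url, hash] of observed) {
    const approvedHash = approved.get(url);
    if (approvedHash === undefined) added.push(url);
    else if (approvedHash !== hash) modified.push(url);
  }
  const removed = [...approved.keys()].filter((url) => !observed.has(url));
  return { added: added.sort(), modified: modified.sort(), removed: removed.sort() };
}

// New or changed scripts should raise an alert; removals are worth
// reviewing but do not add code to the page.
function needsAlert(diff: InventoryDiff): boolean {
  return diff.added.length > 0 || diff.modified.length > 0;
}
```

A script that an LLM suggested and a developer merged without going through change control shows up here as `added`. A compromised CDN file shows up as `modified`.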

HIPAA

- Input fields, authentication data, or form submissions that front-end code captures or logs can constitute PHI

- If that data leaves the browser for a third party without patient authorization or a business associate agreement, it can be an impermissible disclosure, even if unintentional

GDPR & CCPA

- Generated scripts that track users (e.g., via heatmaps, session replay, telemetry) without consent can trigger enforcement actions

- LLMs may suggest default implementations that do not respect opt-in/out frameworks
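A common mitigation is to keep non-essential scripts off the page until the user has opted in. The sketch below assumes three hypothetical consent categories and a `ThirdPartyScript` record per tag. Real consent management platforms define their own categories and signals, and jurisdictions with opt-out models may need a different default.

```typescript
// Hypothetical consent categories; real consent platforms define their own.
type ConsentCategory = "necessary" | "analytics" | "marketing";

interface ThirdPartyScript {
  src: string;
  category: ConsentCategory;
}

// Opt-in by default: only strictly necessary scripts load without consent;
// everything else waits until the user grants its category.
function scriptsAllowedToLoad(
  scripts: readonly ThirdPartyScript[],
  granted: ReadonlySet<ConsentCategory>,
): ThirdPartyScript[] {
  return scripts.filter((s) => s.category === "necessary" || granted.has(s.category));
}
```

In a browser, the returned list would decide which `<script>` elements get created. Anything filtered out never loads, so it cannot collect data before consent. This is the behavior that a generated "just drop in the analytics snippet" implementation typically lacks.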

The Tea App Breach: A Cautionary Tale for Vibe Coding and Client‑Side Risk

What happened in the Tea app breach?

In late July 2025, the Tea dating app—marketed as a safe, women-only platform—suffered two major data breaches:

- First breach (~July 25): An unsecured Firebase storage bucket exposed about 72,000 images: 13,000 selfies and ID photos, plus 59,000 public app images from posts and messages. The data came from legacy accounts created before February 2024.

- Second breach: Shortly after, a separate database exposed 1.1 million private direct messages sent between 2023 and that week, covering deeply personal topics such as abortion, infidelity, and contact-sharing. The DM feature was temporarily disabled.

These leaks sparked regulatory scrutiny, multiple class-action lawsuits, and widespread user harm.

How Feroot could have prevented or mitigated this breach

Feroot’s client-side security platform could have protected Tea users in several ways:

- Visibility into unvetted AI-generated or legacy scripts: In a vibe coding workflow, generated front-end code may include Firebase integrations or data handlers without encryption. Feroot monitors and flags script behaviors and outbound data flows, especially those uploading images or messages, not just code syntax.

- Detection of unauthorized third-party access: Even if Tea used a misconfigured Firebase bucket, Feroot could have alerted on unexpected script or network traffic from users’ browsers to insecure endpoints.

- Real-time blocking of privacy-invasive behavior: Feroot can enforce policies preventing export of image data, form input, or messaging content unless properly approved, stopping unauthorized telemetry and storage leaks.

- Audit-ready mapping to compliance frameworks: Detected script behaviors could be tied back to PCI DSS 6.4.3 / 11.6.1 and GDPR/CCPA controls, with logs ready for breach response and incident review.

In short, Feroot offers a runtime view of actual activity in users’ browsers, catching behavior that static review or backend scanning would miss, and helps prevent data exposure rooted in auto-generated or legacy front-end logic.

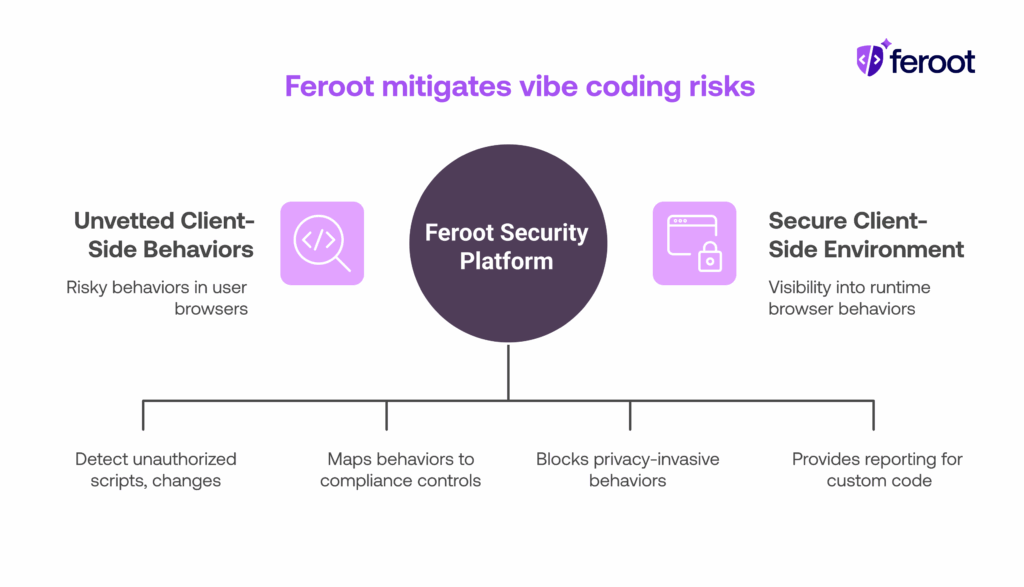

How does Feroot detect risks introduced by vibe coding?

Feroot is a client-side security platform that continuously monitors front-end behaviors and maps them to compliance risks—even when code is generated via LLMs.

Key capabilities:

- Detects unauthorized scripts and client-side changes in real time

- Maps risky behaviors to controls in PCI DSS 4.0, HIPAA, and GDPR

- Blocks privacy-invasive behaviors like tracking scripts, unvetted third-party requests, and dynamic injections

- Provides audit-ready reporting for both custom and auto-generated code

- Works without needing developer annotations or static reviews

Why this matters for vibe coding:

- LLM-generated code can behave unexpectedly

- Developers may not flag every third-party script

- Your compliance posture depends on runtime behavior—not just source control

- Feroot gives security teams visibility into the actual behaviors happening in users’ browsers, not just what’s visible in the code editor.

What should CISOs and security leaders do to reduce exposure?

To manage the growing risks introduced by vibe coding, CISOs and AppSec leaders should:

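1. Treat LLM-generated code like any other production input

- Route it through the same secure code review, static analysis, and privacy assessments required for human-written code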

2. Prioritize runtime client-side visibility

- Static analysis tools can’t observe how scripts actually behave in the browser at runtime

- Use platforms like Feroot to monitor dynamic script behavior, changes, and data flows

3. Lock down third-party script usage

- Enforce policies around Subresource Integrity (SRI) and script authorization

- Block unauthorized domains from executing in the browser
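Both controls in this step can be expressed at the page level: a Content-Security-Policy `script-src` directive limits which origins may execute scripts, and SRI attributes pin the exact files. A minimal sketch that builds a header value from an approved-origin list follows. The function name and the extra directives chosen here are assumptions, not a complete policy.

```typescript
// Builds a Content-Security-Policy value that only lets the page's own origin
// and an explicit allowlist of https origins execute scripts.
function buildCsp(approvedScriptOrigins: readonly string[]): string {
  for (const origin of approvedScriptOrigins) {
    // Reject entries that are not bare https origins, such as URLs with paths
    // or http: schemes. new URL() itself throws on strings that are not absolute URLs.
    const url = new URL(origin);
    if (url.protocol !== "https:" || url.origin !== origin) {
      throw new Error(`Not a bare https origin: ${origin}`);
    }
  }
  const scriptSrc = ["'self'", ...approvedScriptOrigins].join(" ");
  return [
    "default-src 'self'",
    `script-src ${scriptSrc}`,
    "object-src 'none'", // no plugin content
    "base-uri 'self'",   // stops injected <base> tags from redirecting relative script URLs
  ].join("; ");
}
```

The value is sent as the `Content-Security-Policy` response header. Deploying it first as `Content-Security-Policy-Report-Only` lets a team see which scripts would be blocked before enforcing the policy.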

4. Update your compliance controls for PCI DSS 4.0 and HIPAA

- Use PCI DSS 6.4.3 and 11.6.1 as the basis for your script inventory and monitoring requirements

- Review your PHI handling for any LLM-generated UI or form logic

5. Educate developers on the risks of vibe coding

- Encourage security prompts in AI tools (e.g., “only use vetted libraries”)

- Offer secure-by-default code snippets or wrappers to reduce exposure
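One example of a secure-by-default wrapper is a logging helper that teams hand to developers (and reference in their prompts) in place of raw `console.log()`. With it, the debug logging that generated code tends to include cannot leak sensitive fields. The sketch assumes a hypothetical key-name pattern for sensitive fields. A real deployment would derive that pattern from the organization’s own data map.

```typescript
// Hypothetical pattern for sensitive field names; derive the real list from your data map.
const SENSITIVE_KEY = /pass(word)?|ssn|dob|card|cvv|token|email|phone|diagnosis/i;

// Recursively replaces values whose key looks sensitive with a placeholder.
function redact(value: unknown): unknown {
  if (Array.isArray(value)) return value.map((item) => redact(item));
  if (typeof value === "object" && value !== null) {
    const out: Record<string, unknown> = {};
    for (const [key, inner] of Object.entries(value)) {
      out[key] = SENSITIVE_KEY.test(key) ? "[REDACTED]" : redact(inner);
    }
    return out;
  }
  return value;
}

// Debug logger that is a no-op in production and redacts sensitive keys elsewhere.
function createSafeLogger(isProduction: boolean, sink: (line: string) => void = console.debug) {
  return (message: string, data?: unknown): void => {
    if (isProduction) return;
    sink(data === undefined ? message : `${message} ${JSON.stringify(redact(data))}`);
  };
}
```

The production logger returns before formatting anything, so in production no data reaches the console. The key-name pattern is a safety net, not a substitute for keeping PII and PHI out of logs in the first place.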

FAQ

Is vibe coding dangerous for production environments?

Not inherently, but used without oversight it increases the risk of deploying unvetted, privacy-impacting code, especially in client-facing apps.

Can vibe coding introduce third-party scripts without me knowing?

Yes. LLMs frequently suggest common analytics or UI libraries that load external JavaScript—sometimes from risky or unauthorized sources.

How does Feroot protect against vibe coding risks?

Feroot monitors runtime browser behavior, detects unauthorized scripts, flags privacy violations, and maps issues to compliance frameworks—even when code is auto-generated.

Does PCI DSS 4.0 require visibility into browser-side scripts?

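Yes. Requirement 6.4.3 mandates authorization, integrity assurance, and an inventory with written justification for every script executed on payment pages. Requirement 11.6.1 requires a change- and tamper-detection mechanism that alerts on unauthorized modifications to payment pages as received by the browser.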

Can I use LLMs for frontend development safely?

Yes—with the right guardrails. Review generated code, monitor client-side activity, and block unapproved behaviors post-deployment.

Conclusion

Vibe coding unlocks developer speed—but creates blind spots for privacy, compliance, and security.

Security teams need to adapt—fast:

- Generated code can include risky behaviors without developer intent

- Compliance frameworks increasingly scrutinize browser-side activity

- Client-side visibility is essential, especially under PCI DSS 4.0 and HIPAA

- Feroot helps CISOs monitor, control, and document client-side behaviors—whether written by humans or generated by AI.