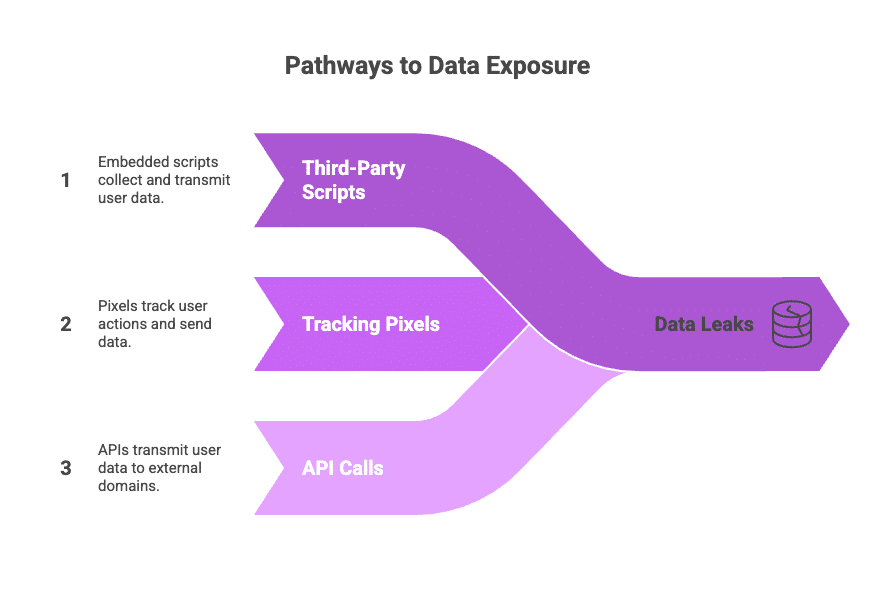

Website data leaks don’t require hackers. They happen when legitimate scripts, analytics pixels, and chat widgets transmit sensitive data to third parties through routine operations. Traditional security tools miss these leaks because they monitor server-side traffic while the exposure occurs in customer browsers.

This visibility gap is why organizations use client-side monitoring platforms to detect browser-level data flows that security tools can’t see. This article explains the technical challenge, regulatory requirements, and how continuous monitoring addresses what manual audits cannot.

What you’ll learn in this article:

- Why most data leaks stem from browser-side transfers by approved scripts on high-risk pages like portals and payment flows, not from external attacks

- How regulations like GDPR, HIPAA, and PCI DSS require continuous visibility into what data leaves your site and which third parties receive it

- Which technical controls (client-side monitoring, CSP, script governance) and evidence regulators expect to see during audits and investigations

- How to build a phased prevention program that moves from discovery to governance, with manual vs. automated approaches to maintaining compliance

Understanding website data leaks

To understand data leaks, we first need to distinguish them from breaches. A breach involves someone gaining unauthorized access to a system. A leak happens when routine features send more data, or send it more widely, than anyone intended.

For example, a site may load an analytics tool, trigger a pixel, or pass form details through an API. These actions look ordinary because, indeed, they are ordinary. Nothing in the behavior stands out. And that is why they get missed.

Traditional web visibility tools overlook this movement because they watch what leaves the server. But many leaks happen in the browser instead. Since the server never sees the request, the control never gets a chance to act.

This pattern shows up across industries. Most hospital websites send visitor information to third parties, yet only some of them disclose the practice.

The pattern is consistent: ordinary features can share more than expected when their behavior goes unreviewed.

With that context in place, let’s take a closer look at what makes security breaches different from data leaks.

Data Leaks vs. Security Breaches: Key Distinctions

| Characteristic | Data Leak | Security Breach |
| --- | --- | --- |
| How it happens | Legitimate scripts transmit data to third parties through normal website functions | Unauthorized access by an external attacker through vulnerability exploitation |
| Visibility to traditional tools | Often invisible; happens client-side in the browser, bypassing server monitoring | Detected by SIEM, IDS/IPS, and server-side security tools |
| Common causes | Marketing pixels, analytics tags, chat widgets, and session replay on sensitive pages | SQL injection, malware, credential theft, ransomware |
| Data path | User’s browser connects directly to third-party endpoints | Attacker gains access to internal systems or databases |
| Intent | Usually unintentional; scripts operate as designed, but in the wrong context | Malicious; deliberate attempt to steal or compromise data |
| Discovery | Requires client-side monitoring and network analysis of browser behavior | Detected through logs, alerts, anomaly detection, or incident response |
| Regulatory view | Impermissible disclosure (HIPAA), unlawful processing (GDPR), unauthorized sharing (CCPA) | Data breach requiring notification under breach laws |
| Prevention | Script governance, CSP, field masking, continuous monitoring | Firewalls, patching, access controls, encryption |

Your data leak attack surface

Web risk doesn’t always manifest dramatically. Often, it shows up in familiar tools like analytics tags, chat widgets, and optimization scripts that shuffle sensitive data off your website and out of your control. Understanding this surface is the first step.

Components to consider

- First-party scripts you control: your own JavaScript, error-tracking libraries, and logging tools.

- Third-party marketing and analytics tags: e.g., Google Analytics, Facebook Pixel, attribution services.

- Customer support tools: chat widgets, feedback forms, and session replay SDKs.

- Advertising and optimization elements: retargeting networks, A/B-testing frameworks.

- Tag-management systems: centralized containers that load many scripts with minimal oversight.

- Hidden fourth parties: your approved vendor loads additional scripts that then reach out to new domains.
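To make the first-, third-, and fourth-party distinction concrete, here is a minimal Python sketch that labels every domain reachable from a page. It assumes you already have a hypothetical `loaded_by` map recording which domain's scripts pulled in which other domains (for example, rebuilt from the initiator column in browser developer tools); the domain names are illustrative only.

```python
from collections import deque


def classify_domains(
    first_party: str,
    approved_vendors: set[str],
    loaded_by: dict[str, list[str]],
) -> dict[str, str]:
    """Label each domain reachable from the site as first-, third-, or fourth-party.

    loaded_by maps a domain to the domains its scripts pulled in
    (a hypothetical input, e.g. rebuilt from DevTools initiator data).
    """
    labels: dict[str, str] = {first_party: "first-party"}
    queue = deque([first_party])
    while queue:  # breadth-first walk of the load chain
        parent = queue.popleft()
        for child in loaded_by.get(parent, []):
            if child in labels:
                continue  # already labeled; also guards against cycles
            if child in approved_vendors:
                labels[child] = "third-party (approved)"
            elif labels[parent] == "first-party":
                labels[child] = "third-party (unapproved)"
            else:
                # Pulled in by a vendor, not by you: a hidden fourth party.
                labels[child] = "fourth-party"
            queue.append(child)
    return labels
```

With `{"shop.example": ["tags.vendor-a.example"], "tags.vendor-a.example": ["sync.adnet.example"]}` and `tags.vendor-a.example` approved, `sync.adnet.example` comes back as a fourth party: a domain nobody on your team chose, reached only through a vendor.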

For example, a routine marketing tag audit discovered a Meta Pixel firing on authenticated pages, transmitting appointment types and hashed identifiers to ad platforms. The incident prompted a privacy review and a state inquiry.

But it also made one point clear: the issue wasn’t the pixel itself, but the lack of visibility into where it was running.

Fortunately, the fix was simple. The team removed the tag from authenticated pages, tightened the data-collection rules, and updated vendor contracts to define clear handling limits.

It’s a pattern that repeats in many environments, and it usually points to the same next step: getting a clear inventory of the scripts that run where, and what they touch.

To do that, identify which scripts run in authenticated contexts, what fields they read, and which external domains receive the data. If even a single audit reveals unexpected flows, escalate the issue immediately to the teams that own privacy policy and vendor contracts.
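A practical starting point for the "which external domains receive the data" question is a HAR file, which browsers can export from their Network panel while you click through an authenticated page. The Python sketch below assumes the standard HAR 1.2 layout (`log.entries[].request` with `url`, `queryString`, and an optional `postData.text`); the domain names and file name in the usage note are hypothetical.

```python
import json
from urllib.parse import urlsplit


def load_har(path: str) -> dict:
    """Load a HAR 1.2 file exported from the browser's Network panel."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def external_data_flows(har: dict, first_party_domain: str) -> dict[str, dict]:
    """Summarize which external hosts received requests, and whether they carried data."""
    flows: dict[str, dict] = {}
    for entry in har["log"]["entries"]:
        request = entry["request"]
        host = urlsplit(request["url"]).hostname or ""
        if host == first_party_domain or host.endswith("." + first_party_domain):
            continue  # first-party traffic is out of scope for this report
        summary = flows.setdefault(
            host, {"requests": 0, "param_names": set(), "has_body": False}
        )
        summary["requests"] += 1
        # Query-string parameter names hint at what was sent (e.g. "em", "ev").
        summary["param_names"].update(p["name"] for p in request.get("queryString", []))
        # HAR stores request bodies under request.postData.text when captured.
        if request.get("postData", {}).get("text"):
            summary["has_body"] = True
    return flows
```

Usage might look like `external_data_flows(load_har("portal.har"), "example.org")`. Each resulting host is a recipient that should appear in your inventory, your vendor contracts, and your privacy notice.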

Assessment questions to ask together

- Can you list every external domain your site sends data to?

- For each script, what data is accessed, and where is it transmitted?

- Are all fourth-party relationships documented and vetted?

Website data leak prevention strategy

Preventing website data leaks is less about locking down everything and more about applying control and visibility in layers. Each layer strengthens the next. What we’ve learned working with hundreds of teams is that leaks rarely occur because someone ignored security; they happen because the browser is doing more than anyone realized.

Minimize data collection

Every field you collect carries exposure potential. Healthcare and financial websites often ask for identifiers that aren’t strictly necessary for the transaction. Reducing what you collect, and where you collect it, shrinks your attack surface immediately.

Form-level rules help here. Redact or hash sensitive entries, and shift tracking logic to the server side when you can.
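As a rough illustration of these form-level rules, the Python sketch below builds an analytics event on the server from submitted form data: highly sensitive fields are dropped entirely and an email is normalized and SHA-256 hashed. The field names are hypothetical. Keep in mind that hashing is pseudonymization, not anonymization: a hashed email is still personal data under GDPR, and ad platforms can match it (which is exactly what made the hashed identifiers in the pixel example above a problem), so dropping a field is the safer default.

```python
import hashlib

# Hypothetical field names; adjust to your own forms.
DROPPED_FIELDS = {"ssn", "dob", "diagnosis", "card_number"}  # never forwarded
HASHED_FIELDS = {"email"}  # forwarded only as a SHA-256 digest


def prepare_event(form: dict[str, str]) -> dict[str, str]:
    """Build a server-side analytics event from submitted form data."""
    event: dict[str, str] = {}
    for name, value in form.items():
        if name in DROPPED_FIELDS:
            continue  # redact by omission: the value never leaves your server
        if name in HASHED_FIELDS:
            normalized = value.strip().lower()
            event[name] = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        else:
            event[name] = value
    return event
```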

Control third-party access

Scripts that power analytics, ads, or chat should pass through a structured authorization process that includes security, legal, and privacy reviews.

For example, a major retailer reduced its client-side risk by 80% after introducing a simple script intake form. No new tag could go live without an approved business justification and a signed Data Processing Agreement restricting reuse. This allowed the team to align each script with a defined purpose and a documented level of oversight.
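An intake gate like the one described can be expressed as a simple record plus a check. The Python sketch below is hypothetical (the class and field names are not from any real tool) and encodes only the two rules from the example: an approved business justification and a signed Data Processing Agreement.

```python
from dataclasses import dataclass


@dataclass
class ScriptIntake:
    """Hypothetical intake record submitted before a new tag may go live."""
    vendor: str
    src_url: str
    business_justification: str
    dpa_signed: bool

    def blockers(self) -> list[str]:
        """Return the reasons this tag cannot go live yet (empty means approved)."""
        problems = []
        if not self.business_justification.strip():
            problems.append("missing business justification")
        if not self.dpa_signed:
            problems.append("no signed Data Processing Agreement")
        return problems
```

Returning a list of blockers, rather than a bare yes or no, gives the requesting team something concrete to fix and leaves a record of why a tag was held back.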

Governance through tag-management workflows is risk containment.

Technical controls

A strong Content Security Policy (CSP) acts as a guardrail: it allowlists the domains scripts may load from and the domains the page may send data to, and the browser blocks everything else.

Combine CSP with data-masking scripts that automatically redact PII, and with iframe sandboxing for third-party widgets. These measures contain the exposure if something misbehaves.
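The Python sketch below assembles a CSP header value from per-directive allowlists and wraps a widget in a sandboxed iframe. The host names are placeholders. Note that `script-src` only controls where code loads from; leaks are usually limited by `connect-src` (fetch, XHR, WebSocket, `sendBeacon`) and `img-src` (image-based tracking pixels), so the sketch sets all three.

```python
from html import escape


def build_csp(
    script_hosts: list[str],
    connect_hosts: list[str],
    img_hosts: list[str],
    frame_hosts: list[str],
) -> str:
    """Assemble a Content-Security-Policy header value from approved hosts."""
    directives = {
        "default-src": ["'self'"],
        "script-src": ["'self'", *script_hosts],    # where scripts may load from
        "connect-src": ["'self'", *connect_hosts],  # fetch, XHR, WebSocket, sendBeacon targets
        "img-src": ["'self'", *img_hosts],          # image requests, including tracking pixels
        "frame-src": ["'self'", *frame_hosts],      # embeddable widgets
        "object-src": ["'none'"],
    }
    return "; ".join(f"{name} {' '.join(values)}" for name, values in directives.items())


def sandboxed_iframe(src: str) -> str:
    """Embed a third-party widget in a sandboxed iframe.

    Without allow-same-origin the frame gets an opaque origin and loses access
    to cookies and web storage; some widgets need more permissions than this.
    """
    return f'<iframe src="{escape(src, quote=True)}" sandbox="allow-scripts allow-forms"></iframe>'
```

A reasonable rollout is to send the policy first as `Content-Security-Policy-Report-Only`, review what it would have blocked, and only then enforce it.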

Monitor and detect

Visibility should continue after deployment, because scripts evolve over time. Vendors release updates often, and those changes can shift what a script does or the data it touches.

One reason PCI DSS v4.0.1 Requirement 6.4.3 requires authorization, integrity, and inventory for scripts on payment pages is that skimming attacks routinely exploit unmonitored JavaScript in checkout flows. The same logic applies to portals and applications that handle PHI or financial data.
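One common way to address the integrity part of this requirement for static script files is Subresource Integrity (SRI): the page pins a hash of the exact file, and the browser refuses to run it if the bytes change. The Python sketch below computes that value; the URL in the usage note is hypothetical. SRI does not fit scripts that vendors legitimately change often, which is one reason it is usually paired with monitoring rather than used alone.

```python
import base64
import hashlib


def sri_hash(script_bytes: bytes) -> str:
    """Compute a Subresource Integrity value (sha384, base64) for a script's exact bytes."""
    digest = hashlib.sha384(script_bytes).digest()
    return "sha384-" + base64.b64encode(digest).decode("ascii")


def pinned_script_tag(src: str, script_bytes: bytes) -> str:
    """Emit a <script> tag the browser will refuse to run if the file changes."""
    # crossorigin="anonymous" is needed for SRI checks on cross-origin scripts.
    return (
        f'<script src="{src}" integrity="{sri_hash(script_bytes)}" '
        'crossorigin="anonymous"></script>'
    )
```

For example, `pinned_script_tag("https://cdn.pay.example/widget.js", reviewed_bytes)` would pin the version your team actually reviewed.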

Privacy compliance

Your privacy policy must mirror what your site actually does. Many privacy policies list generic partners but omit real-time tracking flows. Effective programs tie consent enforcement to script execution and support data subject rights by maintaining an up-to-date inventory of all data recipients.
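Tying consent to script execution can be as simple as tagging each inventoried script with the purpose it serves and loading only those whose purpose the visitor granted. The Python sketch below assumes a hypothetical inventory format and purpose names; it fails closed, because a script missing from the inventory is never returned at all.

```python
def allowed_scripts(script_purposes: dict[str, str], granted: set[str]) -> list[str]:
    """Return the inventoried scripts that may run for this visitor.

    script_purposes maps script URL -> purpose (hypothetical labels such as
    "analytics" or "advertising"). Strictly necessary scripts always run;
    everything else runs only if its purpose was granted.
    """
    return [
        src
        for src, purpose in script_purposes.items()
        if purpose == "strictly_necessary" or purpose in granted
    ]
```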

Detection Requirements

Most data-leak investigations start with the same question: how long has this been happening? The answer usually reveals that visibility was at best partial. Traditional DLP tools monitor what leaves the server; they don’t see what the browser sends.

The first layer of visibility comes from network activity. Every external API call, embedded request, or data-filled URL parameter must be observable.
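Data-filled URL parameters are often the easiest leak to spot. The Python sketch below decodes a request URL's query string and checks each value against a few illustrative patterns; the patterns and example URL are assumptions, and real classification needs far more than regular expressions (names, diagnoses, and free text will slip past these).

```python
import re
from urllib.parse import parse_qsl, urlsplit

# Illustrative patterns only; they will miss many real identifiers.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "phone": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
}


def pii_in_url(url: str) -> list[tuple[str, str]]:
    """Return (parameter, pii_type) pairs for query values that look like PII."""
    hits = []
    # parse_qsl percent-decodes values, so "jane%40example.com" becomes an email.
    for name, value in parse_qsl(urlsplit(url).query, keep_blank_values=True):
        for pii_type, pattern in PII_PATTERNS.items():
            if pattern.search(value):
                hits.append((name, pii_type))
    return hits
```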

Then there is form-field access: understanding which scripts read which fields. This is where many leaks begin. In one hospital case, for example, a new chat widget started reading patient form data the week it was deployed. No one noticed until network logs showed POST requests to an unfamiliar analytics domain.

Next is data-transmission analysis: the types of data being sent, how frequently, and whether those flows have changed. A sudden spike in outbound calls from a specific script often signals a vendor update or a new dependency.
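A minimal version of this comparison, sketched in Python, keeps per-script counts of outbound requests by destination and compares each period against a baseline. The data shape and the 3x spike threshold are illustrative assumptions, not recommended values.

```python
def flag_changes(
    baseline: dict[str, dict[str, int]],
    today: dict[str, dict[str, int]],
    spike_factor: float = 3.0,  # illustrative threshold
) -> list[str]:
    """Flag new destinations and volume spikes per script.

    Both inputs map script URL -> {destination host: outbound request count}.
    """
    alerts = []
    for script, destinations in today.items():
        known = baseline.get(script, {})
        for host, count in destinations.items():
            if host not in known:
                alerts.append(f"{script}: new destination {host}")
            elif count > spike_factor * known[host]:
                alerts.append(f"{script}: {host} jumped from {known[host]} to {count} requests")
    return alerts
```

A new destination is usually the more important signal of the two: it is what the chat-widget case above would have produced on day one.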

The issue is compounded by the growing number of hidden or “fourth-party” scripts loaded by your approved vendors.

Continuous monitoring solves what periodic audits cannot. Point-in-time scans miss the fact that vendors push updates constantly, sometimes daily. PCI DSS v4.0.1 addresses this dynamic behavior directly: Requirement 6.4.3 requires every script on a payment page to be authorized, integrity-checked, and inventoried, and Requirement 11.6.1 requires a change- and tamper-detection mechanism that alerts on unauthorized modifications to payment pages. The same logic applies to healthcare, finance, and retail sites that handle sensitive identifiers.
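The core of continuous script monitoring is a repeated diff: fingerprint every script you observe, then compare each snapshot with the last one. The Python sketch below assumes you already collect script URLs and contents on some schedule (how often is up to you); it shows the comparison step only, not a complete monitoring system.

```python
import hashlib


def fingerprint(script_bytes: bytes) -> str:
    """Hash a script's content so any vendor change shows up as a new value."""
    return hashlib.sha256(script_bytes).hexdigest()


def diff_inventory(previous: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Compare two snapshots mapping script URL -> content fingerprint."""
    return {
        "added": sorted(current.keys() - previous.keys()),
        "removed": sorted(previous.keys() - current.keys()),
        "changed": sorted(
            url for url in current.keys() & previous.keys() if current[url] != previous[url]
        ),
    }
```

Anything under "added" or "changed" on a sensitive page is a candidate for review before the next audit, not after it.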

If your goal is to prevent data leaks from websites, monitoring cannot be optional. It is the control that tells you whether everything else is actually working.

Privacy Compliance Connection

When privacy teams and CISOs discuss website compliance, they often focus on consent banners and cookie policies. But those controls mean little if you don’t actually know what data leaves your site. Every major framework, whether a law like GDPR or HIPAA or an industry standard like PCI DSS, rests on one central principle: accountability through visibility. If you can’t identify what is leaking and where it goes, you can’t claim compliance.

Regulatory Requirements for Website Data Flows

| Framework | Core Requirement | What Triggers Violation | Enforcement Reality |
| --- | --- | --- | --- |
| GDPR (EU) | Data minimization (Article 5) and records of processing (Article 30). Disclose recipients or categories of recipients (Articles 13-14). | Transmitting identifiers or analytics data to unlisted third parties constitutes undeclared processing. | The average fine for inadequate disclosure exceeds €4.2 million. Recent enforcement targets undisclosed tracking. |
| CCPA/CPRA (California) | Defines “sharing” and “selling” broadly enough to include analytics and ad pixels. Requires disclosure and an opt-out for cross-context behavioral advertising. | Any transfer of personal data to third parties for behavioral advertising without a proper “Do Not Sell or Share” link. | Over 60% of U.S. commercial websites using behavioral analytics fail to display accurate opt-out links (2024 analysis). |
| HIPAA (Healthcare) | OCR’s December 2022 bulletin and its 2024 update: tracking technologies on patient portals can constitute impermissible disclosure of PHI. | Pixels or analytics collecting patient identifiers or visit data without a BAA. | Novant Health and Community Health Network cases led to multimillion-dollar settlements. |
| PCI DSS v4.0.1 (Payments) | Requirement 6.4.3 mandates authorization, integrity assurance, and an inventory for all scripts on payment pages. | Unauthorized scripts on checkout or payment pages that can access cardholder data. | Direct response to client-side skimming incidents that compromised millions of payment records. |
| State Privacy Laws (VCDPA, CTDPA, etc.) | Document data-sharing relationships and offer consumers mechanisms to limit transfers. | Undisclosed sharing with third parties or lack of consumer control mechanisms. | Emphasis on disclosure and consent across all U.S. state frameworks. |

Privacy frameworks are, at their core, visibility frameworks. They assume you know what data your systems transmit, to whom, and for what purpose. Without that knowledge, transparency becomes guesswork. Continuous discovery closes that gap by keeping the real data flows aligned with what your policies promise.
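Keeping real flows aligned with policy promises reduces, at its simplest, to a set comparison. The Python sketch below assumes you have translated your privacy notice's recipient list into domains (a hypothetical, manual step, since policies usually name companies rather than hosts) and that observed hosts come from browser monitoring or HAR captures.

```python
def disclosure_gaps(observed_hosts: set[str], disclosed_domains: set[str]) -> set[str]:
    """Return observed recipient hosts not covered by any disclosed domain.

    A host counts as covered if it equals a disclosed domain or is a subdomain of one.
    """
    def covered(host: str) -> bool:
        return any(host == d or host.endswith("." + d) for d in disclosed_domains)

    return {host for host in observed_hosts if not covered(host)}
```

Every host this returns is either an undisclosed recipient or a sign that the policy is out of date; both need an owner.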

GDPR

Under the GDPR, the foundation is data minimization and lawful processing. Article 5 requires collecting only what’s necessary, and Article 30 requires controllers to maintain records of processing activities, including the categories of recipients to whom personal data is disclosed.

When data from a website reaches third parties that were not part of those records, regulators treat that movement as processing that needs to be documented and disclosed.

Articles 13 and 14 outline those disclosure expectations. Recent enforcement actions highlight a consistent theme: regulators look closely at whether organizations understand and describe their tracking tools accurately. The focus is less on volume of data and more on clarity about where it goes.

CCPA and CPRA

The CCPA and CPRA extend the same logic. They define “sharing” and “selling” broadly enough to cover analytics and advertising pixels. Any transfer of personal data to a third party for cross-context behavioral advertising requires disclosure and an opt-out mechanism.
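An opt-out only works if it is enforced before advertising tags load. The Python sketch below shows a server-side check, assuming request header names arrive lower-cased and that a hypothetical `dns_opt_out` cookie is set when a visitor uses your "Do Not Sell or Share" link. It also honors the Global Privacy Control signal (`Sec-GPC: 1`), which California regulators treat as a valid opt-out request.

```python
def may_load_ad_tags(headers: dict[str, str], cookies: dict[str, str]) -> bool:
    """Decide whether cross-context behavioral advertising tags may load.

    Assumes header names are lower-cased by the web framework.
    """
    # Global Privacy Control: participating browsers send "Sec-GPC: 1".
    if headers.get("sec-gpc", "").strip() == "1":
        return False
    # Hypothetical first-party cookie set by the "Do Not Sell or Share" link.
    if cookies.get("dns_opt_out") == "1":
        return False
    return True
```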

Yet a 2024 analysis by Cybersimple found that over 60% of U.S. commercial websites using behavioral analytics still fail to display accurate “Do Not Sell or Share” links. The finding highlights how easy it is for these requirements to drift as sites evolve, especially when data flows change faster than policy updates.

HIPAA

With HIPAA, the principle is straightforward: if a tool sees PHI, it falls under HIPAA’s rules. That’s the lens HIPAA uses when it evaluates any tracking tool running in a patient portal.

This simply means that when a script touches identifiers or visit data in an authenticated space, OCR treats that activity the same way it treats any other interaction involving PHI.

The 2022 bulletin and its 2024 update make this more explicit. If pixels or analytics tools collect patient identifiers or visit data, covered entities must execute a Business Associate Agreement (BAA) with the vendor or disable those tools entirely.

The writing on the wall is clear: HIPAA expects tracking tools to behave in ways that match your documented privacy practices.
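The "BAA or disable" rule can be enforced mechanically once two facts are recorded: whether a page may expose PHI, and whether each tracking vendor has a signed BAA. The Python sketch below assumes both come from a hypothetical registry your privacy team maintains; deciding which pages count as PHI-bearing is a legal judgment the code does not make.

```python
def blocked_trackers(page_may_expose_phi: bool, trackers: dict[str, bool]) -> list[str]:
    """Return the trackers that must be disabled on this page.

    trackers maps vendor name -> True if a signed BAA is on file
    (a hypothetical registry maintained by the privacy team).
    """
    if not page_may_expose_phi:
        return []
    return [vendor for vendor, has_baa in trackers.items() if not has_baa]
```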

PCI DSS v4.0.1

PCI DSS v4.0.1 addresses the same risk in a different context: cardholder data. Requirement 6.4.3 mandates an inventory and authorization process for all scripts executing on payment pages, ensuring they are integrity-checked and approved.

The goal is simple: know what runs in your checkout flow and ensure it aligns with the controls already in place.

Even the state privacy laws follow a similar pattern. From Virginia’s CDPA to Connecticut’s CTDPA, the focus remains on transparency: documenting data-sharing relationships and giving consumers clear ways to limit how their information is used.

Across these frameworks, the common thread is visibility. You need to know what data moves, where it goes, and why. Continuous discovery keeps that picture accurate and aligned with policy.

Industry-specific risks

Web data leaks look different in each industry, but the pattern is consistent: client-side scripts and third-party tools become unexpected conduits for sensitive data. Here is how that plays out across sectors.

Healthcare

The U.S. Department of Health and Human Services Office for Civil Rights (HHS OCR) has confirmed that tracking technologies on patient portals can expose protected health information (PHI) and trigger HIPAA violations.

Financial services

Banks and fintechs use analytics SDKs in account portals and loan forms. When these tools transmit applicant data to external vendors, that data moves beyond the institution’s regulatory controls.

E-commerce

Retail sites often share purchase history and behavioral data with ad networks. Studies show a single third-party script can introduce up to eight downstream services that receive data indirectly.

SaaS platforms

Usage-tracking and in-app analytics may transmit configuration details or client data to external telemetry vendors. These are often classified as “operational metrics,” yet may include customer identifiers subject to privacy regulations.

Education

Ed-tech platforms frequently handle student identifiers covered under FERPA. Any plugin that sends data to external domains effectively expands the school’s legal exposure. The U.S. Department of Education has reiterated that third-party vendors that handle student PII are subject to FERPA obligations.

Real-world scenarios

Many teams find that the most revealing moments come from ordinary tools doing something slightly unexpected. A script runs in a new context. A vendor update introduces a new data call. These are routine shifts, but they can create data paths no one planned for.

In one case, a hospital patient portal included a Meta Pixel that collected appointment types and user identifiers from authenticated sessions and sent them to Meta without a BAA.

OCR’s guidance helps organizations interpret situations like this and understand how tracking tools can interact with PHI.

Common challenges

Even with a clear roadmap, organizations run into the same friction points. First, marketing teams often “own” tags and pixels and may see security review as slowing campaigns. An approval workflow, backed by leadership, reframes review as risk reduction rather than veto power.

Moreover, vendors may resist deeper monitoring or detailed data-flow descriptions; this is where contract language matters. If a partner will not accept visibility, that is itself a risk signal.

Another point of friction comes with privacy policies. Many are updated once a year, while the website changes every week.

And across all of this sits the coordination question. Discussions about ownership and budget often settle once teams see the tradeoff more clearly: steady visibility upfront, or more effort later to unwind misalignment.

Manual Approach vs. Feroot’s Automated Intelligence

Most organizations start by manually auditing their websites for data leaks. While this can identify obvious risks, it quickly becomes unsustainable as vendors update scripts, marketing deploys new tags, and pages change constantly.

Discovery and Monitoring

In a manual approach, the security or privacy team inspects page source code, browser network logs, and tag manager configurations for each page type, then repeats the process for every subdomain, authenticated area, and payment flow.

But that’s not the only issue. Because this work happens at a point in time, third-party updates or hidden fourth-party calls often slip past the review. The result is a snapshot that ages quickly as vendors ship new versions.

Feroot’s AlphaPrivacy AI and CodeGuard AI take a different approach. They discover first-, third-, and fourth-party scripts across the entire site in real time and keep watching as pages load, including authenticated portals and payment flows. Because changes in script behavior and new domain connections are tracked as they happen, visibility stays current with each vendor update.

This means that full coverage takes minutes instead of days.

Data Flow Analysis

In a manual review, an analyst works through network traffic logs to see which domains receive data, then tries to connect those calls back to form fields or user actions. Each data type (PII, PHI, payment information) is classified by hand. Edge cases often need legal input, and the findings usually land in spreadsheets that drift as the site evolves.

Thus, it can take hours to analyze and classify a single page.

Feroot’s AlphaPrivacy AI and CodeGuard AI simplify that work. They read the page context, watch form fields, and review network requests to map how data moves end to end.

As a result, sensitive fields and external transmissions are identified automatically, using regulatory definitions for PII, PHI, and payment data. The platforms also maintain a timestamped audit trail of every data transmission, with content analysis.

Compliance Evidence

Manual approach:

- Screenshots, exported logs, and narrative documentation are compiled by hand when regulators inquire.

- Evidence becomes stale within weeks.

- Continuous monitoring between audits is difficult to prove.

- Privacy policy accuracy is verified manually and infrequently.

- Time required: days to assemble a response to a regulatory inquiry.

Feroot AlphaPrivacy AI and CodeGuard AI:

- Structured evidence exports ready for GDPR, HIPAA, PCI DSS, and CCPA audits.

- Pre-built reports showing script inventory, data flows, and vendor relationships.

- Real-time privacy policy validation against actual browser behavior.

- An audit trail that proves continuous operation, not point-in-time compliance.

- Time required: minutes to export audit-ready reports.

The Bottom Line

For an organization with authenticated portals and payment flows, manual discovery and monitoring typically requires 80 to 120 hours for initial assessment and 60+ hours per quarter for ongoing audits. Feroot reduces this to 2-4 hours for initial integration and automated continuous monitoring, roughly an 85% reduction in compliance effort.

How Feroot Closes the Data Leak Gap

Feroot’s AlphaPrivacy AI and CodeGuard AI platforms provide the visibility and control regulators expect. The platforms continuously scan all pages to identify every script, pixel, and widget, analyze which data each tool accesses and where it transmits, automatically flag unauthorized collection or transmission to undisclosed domains, and maintain comprehensive logs for GDPR, HIPAA, PCI DSS, and CCPA compliance.

Real-time alerts notify teams immediately when new scripts appear on sensitive pages or when data flows change. Integration with tag managers across GTM, Adobe, and Tealium enables automated enforcement of governance policies. Evidence generation provides audit-ready documentation that proves continuous oversight, not annual attestations.

Request a Demo to see how Feroot can give your team calm, continuous visibility instead of guesswork.