Tell me if you’ve heard this one before: a company audits its checkout page and discovers 47 scripts running. Only 12 were approved. The other 35? A mystery, and a risk. Nobody knows who added them or whether they’ve been compromised.

That’s what we’re here to talk about today.

What You’ll Learn:

- Why authorization alone fails: Approved scripts get compromised at the source. Your approval process won’t save you.

- The inventory mistake that triggers audit findings: Even solid technical controls fail audits over incomplete documentation.

- What happens at 2am when vendors want you to disable SRI: Real frameworks are tested under operational pressure, not during implementation.

- How to reframe your QSA relationship: They’re partners whose reputation depends on accurate, useful assessments. Most organizations waste that partnership.

Why PCI DSS 6.4.3 exists

The hardest lesson in achieving PCI compliance is learning that your defenses are only as strong as your weakest assumption. For years, organizations built fortress-like network perimeters while assuming that approved JavaScript would remain trustworthy indefinitely. The payment card industry learned otherwise through devastating breaches that followed the same pattern: approved scripts, compromised at the source, harvesting payment data from within the trusted environment.

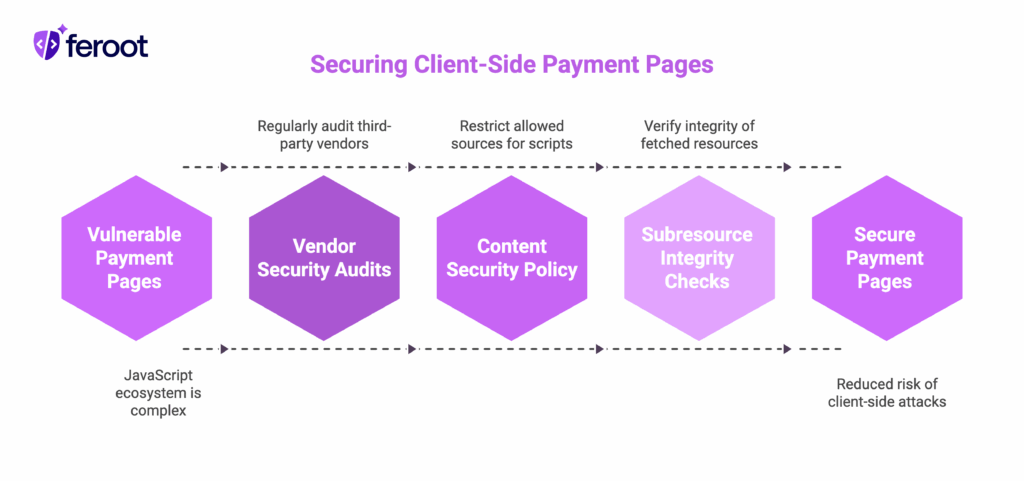

PCI DSS 6.4.3 emerged from this hard-won understanding. It acknowledges that client-side environments require active governance, not passive trust. For every script loaded and executed in the consumer’s browser on a payment page, the requirement establishes three foundational practices: authorization confirms that each script is necessary and approved, integrity verification detects tampering, and an inventory with written business justification provides visibility into your actual attack surface.

The evolution of client-side attacks

Modern payment pages exist in a complex ecosystem of interdependent JavaScript. Analytics track behavior, fraud detection analyzes patterns, marketing tools optimize conversion, customer support enables real-time assistance. Each script serves a legitimate business purpose, yet each represents a potential entry point for malicious code.

The sophistication has evolved considerably. Early attacks relied on obvious injection techniques. Today’s attacks increasingly target the supply chain, tampering with legitimate scripts so that malicious code is delivered through trusted channels. The British Airways breach exemplified this: attackers modified the copy of the Modernizr library that BA’s payment pages legitimately loaded, appending code that quietly sent customers’ card details to an attacker-controlled domain. The checkout kept working normally. An approved script simply did more than it had been approved to do.

This reflects a strategic shift by attackers. Rather than breaching well-defended organizational perimeters, they exploit the trust relationships organizations maintain with vendors. The attack succeeds not through superior technical capability, but through patience and an understanding of how trust operates in complex systems.

IBM’s Cost of a Data Breach research puts the average financial-services incident at over $4 million, and client-side attacks are frequently cited as one of the fastest-growing vectors. The consequences extend beyond direct costs: GDPR fines, CCPA enforcement, and card-brand penalties for PCI DSS non-compliance all carry real financial weight for inadequate client-side security.

Script authorization: Permission vs. trust

Effective authorization requires understanding the difference between permission and trust. Permission is explicit: a formal decision that a specific script may execute for a specific purpose. Trust is implicit: an assumption that approved scripts will continue to behave as expected. The authorization framework in 6.4.3 focuses on making permission explicit while reducing dependence on trust.

Business justification forms the cornerstone. Each script must serve a documented business purpose that justifies its presence on payment pages. Vague justifications like “improves user experience” reflect insufficient analysis. Specific justifications like “validates card number format before the payment form is submitted” demonstrate genuine understanding of business necessity.

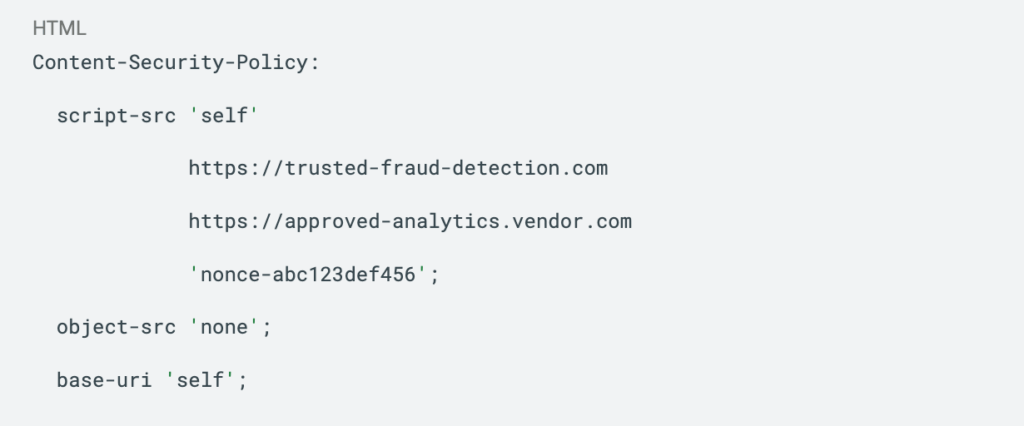

Consider this CSP implementation for payment pages:
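
Here is a minimal sketch of how such a policy might be assembled for each response. It assumes a Python backend that sets its own response headers. The two vendor origins (`payments-vendor.example`, `fraud-vendor.example`) and the function names are hypothetical placeholders, not any framework’s API.

```python
import secrets

# Hypothetical vendor origins for illustration only; substitute the hosts
# your organization has actually authorized for the payment page.
APPROVED_VENDOR_ORIGINS = (
    "https://payments-vendor.example",
    "https://fraud-vendor.example",
)


def new_nonce() -> str:
    """Generate a fresh, unguessable nonce; call once per HTTP response."""
    return secrets.token_urlsafe(16)


def build_payment_page_csp(nonce: str) -> str:
    """Return a Content-Security-Policy header value for a payment page."""
    directives = {
        # Fallback for fetch directives not listed here: same origin only.
        "default-src": ["'self'"],
        # Scripts: our own origin, the two approved vendors, and inline
        # <script nonce="..."> blocks that carry this response's nonce.
        "script-src": ["'self'", *APPROVED_VENDOR_ORIGINS, f"'nonce-{nonce}'"],
        # No plugins, and no <base> tag that could redirect relative script URLs.
        "object-src": ["'none'"],
        "base-uri": ["'none'"],
    }
    return "; ".join(f"{name} {' '.join(values)}" for name, values in directives.items())


if __name__ == "__main__":
    nonce = new_nonce()
    print("Content-Security-Policy:", build_payment_page_csp(nonce))
    # The same nonce must appear on every inline script the page emits.
    print(f'<script nonce="{nonce}">/* approved inline code */</script>')
```

Generating the nonce per response is the important design choice. A fixed nonce sits in every page’s source, so injected code could simply reuse it.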

This policy explicitly permits scripts from your own domain, two specific trusted vendors, and inline scripts carrying the nonce generated for that response. Every other script source is blocked by default. The policy reflects conscious decisions about what code is permitted rather than accepting whatever happens to be present.

However, authorization frameworks must account for dynamic reality. Scripts change versions, vendors update functionality, business requirements evolve. The authorization process must be sustainable and adaptable rather than rigid and brittle.

Integrity verification at scale

Authorization establishes what scripts may execute, but integrity verification ensures approved scripts haven’t been compromised. Without integrity checks, your authorization process only documents which scripts were legitimate when you approved them, not whether they’re still trustworthy today. The distinction is crucial: authorization is a one-time decision, integrity verification is ongoing validation.

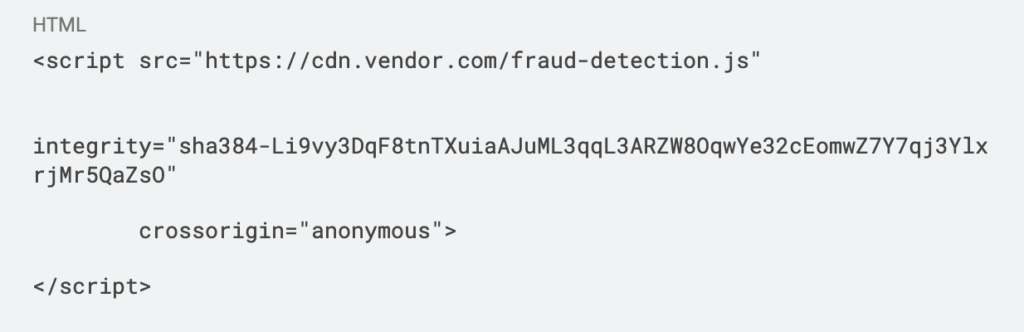

Subresource Integrity provides the most straightforward approach for external scripts:
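
The sketch below shows how an integrity value is produced, assuming you hash the exact file your team reviewed. The helper names and the vendor URL are hypothetical. The `sha384-<base64 digest>` format is the one browsers expect in the `integrity` attribute.

```python
import base64
import hashlib


def sri_hash(script_bytes: bytes, algorithm: str = "sha384") -> str:
    """Compute a Subresource Integrity value such as 'sha384-<base64 digest>'."""
    if algorithm not in ("sha256", "sha384", "sha512"):
        raise ValueError(f"SRI does not define {algorithm!r}")
    digest = hashlib.new(algorithm, script_bytes).digest()
    return f"{algorithm}-{base64.b64encode(digest).decode('ascii')}"


def script_tag(src: str, script_bytes: bytes) -> str:
    """Render a <script> tag pinned to the exact bytes that were reviewed."""
    # crossorigin="anonymous" makes the browser fetch in CORS mode, which it
    # needs to check integrity on a script served from another origin.
    return (
        f'<script src="{src}" integrity="{sri_hash(script_bytes)}" '
        'crossorigin="anonymous"></script>'
    )


if __name__ == "__main__":
    # Hypothetical vendor file; in practice, hash the exact version you reviewed.
    reviewed = b"console.log('vendor widget v2.1');"
    print(script_tag("https://fraud-vendor.example/widget-2.1.js", reviewed))
```

Only the rendered tag ships to customers. The hash is computed at review or build time, not in the browser.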

The hash represents the exact expected content. If the vendor’s CDN is compromised and the script modified, the browser refuses to execute it, effectively preventing the attack.
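
To make the browser’s side concrete, here is a simplified model of the comparison it performs, assuming the script bytes are already downloaded. It follows the shape of the SRI check: only hashes using the strongest listed algorithm count, and any one match passes. It skips details of the full specification, so treat it as an illustration rather than a reference implementation.

```python
import base64
import hashlib

# Relative strength used to decide which listed hashes are considered.
_STRENGTH = {"sha256": 1, "sha384": 2, "sha512": 3}


def integrity_matches(script_bytes: bytes, integrity_attr: str) -> bool:
    """Simplified SRI check: does the fetched script match its integrity attribute?"""
    parsed = []
    for token in integrity_attr.split():
        alg, _, value = token.partition("-")
        value = value.split("?", 1)[0]  # ignore any "?options" suffix
        if alg in _STRENGTH and value:
            parsed.append((alg, value))
    if not parsed:
        # No recognizable hash at all: the browser loads the script unchecked.
        return True
    strongest = max(_STRENGTH[alg] for alg, _ in parsed)
    for alg, expected in parsed:
        if _STRENGTH[alg] != strongest:
            continue  # weaker algorithms are ignored when a stronger one is listed
        actual = base64.b64encode(hashlib.new(alg, script_bytes).digest()).decode("ascii")
        if actual == expected:
            return True
    return False
```

A single appended byte is enough to make this return `False`. That is why skimmer code added to a pinned script cannot slip past a correct `integrity` value. The first branch matters too: an attribute with no recognizable hash provides no protection, so malformed values are worth catching in review.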

Managing SRI at scale requires coordination with vendors and internal processes. Vendors must provide advance notification of script updates along with new hash values. Internal processes must update SRI hashes in production without disrupting payment processing. This operational complexity is significant but necessary.
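
A scheduled check can make this coordination less reactive. It compares each vendor script’s live content with the hash currently pinned in production. The sketch below assumes SHA-384 pins and an injected `fetch` function (any HTTP client would do). `find_drift` and `Drift` are hypothetical names.

```python
import base64
import hashlib
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Drift:
    url: str
    pinned: str
    observed: str


def sri_sha384(data: bytes) -> str:
    """SRI value for data, assuming all production pins use SHA-384."""
    return "sha384-" + base64.b64encode(hashlib.sha384(data).digest()).decode("ascii")


def find_drift(pins: Dict[str, str], fetch: Callable[[str], bytes]) -> List[Drift]:
    """Report scripts whose live content no longer matches the pinned hash.

    `pins` maps script URL -> integrity value deployed in production.
    `fetch` retrieves live bytes; injecting it lets the check run in CI
    or in tests without network access.
    """
    drifted = []
    for url, pinned in sorted(pins.items()):
        observed = sri_sha384(fetch(url))
        if observed != pinned:
            drifted.append(Drift(url, pinned, observed))
    return drifted


if __name__ == "__main__":
    # Stand-in for a real HTTP fetch: the vendor has shipped a new build.
    live = {"https://fraud-vendor.example/widget.js": b"widget v2.2"}
    pins = {"https://fraud-vendor.example/widget.js": sri_sha384(b"widget v2.1")}
    for d in find_drift(pins, live.__getitem__):
        print(f"DRIFT {d.url}: pinned {d.pinned}, live {d.observed}")
```

A drift result should trigger authorization again: review the new version, approve it, and update the pin. It is not a reason to remove the `integrity` attribute.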

Here’s what nobody tells you: the real challenge isn’t implementing SRI. It’s managing the vendor relationship when their script breaks your checkout page at 2am and they want you to temporarily disable integrity checking “just this once.” This is where authorization frameworks either hold or collapse.

The inventory: More than documentation

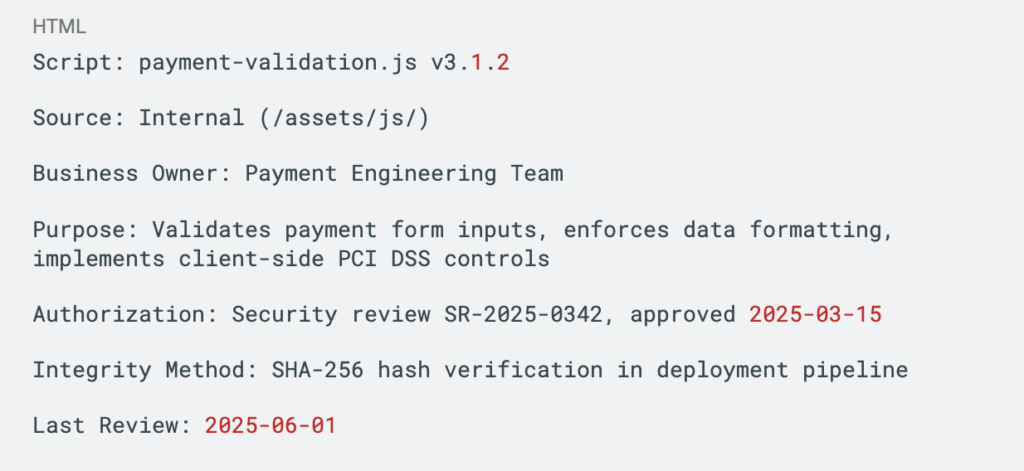

The script inventory serves multiple purposes: compliance documentation, security intelligence, operational reference. An effective inventory provides complete visibility while supporting both audit requirements and ongoing security operations.

Each entry should capture sufficient detail to support informed decision-making:
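
The exact fields vary by organization. As an illustrative sketch only, an entry might be modeled like this. The field names are hypothetical, and the `problems` checks are crude heuristics for spotting gaps, not criteria taken from PCI DSS.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class ScriptInventoryEntry:
    """One row of a payment-page script inventory (field names are illustrative)."""
    src: str                      # URL, or "inline:<identifier>" for inline code
    vendor: str                   # "internal" for first-party code
    business_justification: str   # a specific purpose, not "improves UX"
    business_owner: str           # who answers for the script's presence
    approved_by: str
    approved_on: date
    integrity: Optional[str]      # SRI value, or None with a documented exception
    integrity_exception: str = ""  # why SRI is not used, if integrity is None
    pages: List[str] = field(default_factory=list)        # where the script loads
    data_access: List[str] = field(default_factory=list)  # e.g. form fields it can read
    next_review: Optional[date] = None

    def problems(self) -> List[str]:
        """Return gaps an assessor would likely question (heuristic only)."""
        issues = []
        if len(self.business_justification.split()) < 4:
            issues.append("justification too vague")
        if self.integrity is None and not self.integrity_exception:
            issues.append("no integrity control and no documented exception")
        if self.next_review is None:
            issues.append("no scheduled review date")
        return issues
```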

This level of detail enables informed risk assessment and gives auditors evidence of thorough analysis. Many organizations also run automated discovery tools that regularly scan payment pages and flag discrepancies between the documented inventory and what is actually deployed. This documentation becomes critical when your QSA reviews your compliance posture.
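
Here is a minimal sketch of the comparison such tools perform. It assumes you already have the page’s HTML and a set of inventoried script URLs. It uses only Python’s standard `html.parser`, so it sees only scripts present in the static markup. Scripts injected at runtime, for example by a tag manager, appear only when the page is rendered in a real browser, which is why production discovery tools typically observe live sessions.

```python
from html.parser import HTMLParser
from typing import Set, Tuple
from urllib.parse import urljoin


class _ScriptCollector(HTMLParser):
    """Collect external script URLs and count inline scripts in static HTML."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.external: Set[str] = set()
        self.inline_count = 0

    def handle_starttag(self, tag, attrs):
        if tag != "script":
            return
        src = dict(attrs).get("src")
        if src:
            # Resolve relative paths so they compare against absolute inventory URLs.
            self.external.add(urljoin(self.base_url, src))
        else:
            self.inline_count += 1


def compare_to_inventory(
    html: str, page_url: str, inventory: Set[str]
) -> Tuple[Set[str], Set[str], int]:
    """Return (undocumented scripts, inventoried but absent, inline script count)."""
    collector = _ScriptCollector(page_url)
    collector.feed(html)
    collector.close()
    undocumented = collector.external - inventory
    missing = inventory - collector.external
    return undocumented, missing, collector.inline_count
```

Undocumented scripts are the urgent finding. Inventoried-but-absent entries are stale documentation, which is exactly the kind of gap that turns into an audit finding.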

Working with your QSA

Many organizations mistakenly view their QSA as an adversary. This perspective creates unnecessary friction and often results in audit outcomes that could have been avoided through better collaboration.

Your QSA serves as a partner in achieving compliance, not as an opponent seeking to create problems. Their professional reputation depends on delivering accurate evaluations that help organizations achieve genuine security improvements while meeting regulatory requirements.

Engage with your QSA early in your implementation. Schedule preliminary discussions during planning, well before formal assessment begins. Share your implementation approach, proposed solutions, and documentation strategy. Most QSAs welcome these conversations because they lead to more efficient assessments and better outcomes.

Be transparent about areas where you have questions. If you’re unsure whether your script inventory format meets requirements, ask. If your integrity verification approach differs from common implementations, discuss whether it satisfies underlying control objectives. These conversations prevent surprises during actual assessment.

The long-term perspective

PCI DSS 6.4.3 represents more than a compliance requirement. It’s a framework for sustainable client-side security in an environment where traditional perimeter-based approaches are insufficient. The practices it establishes provide value that extends well beyond payment card protection.

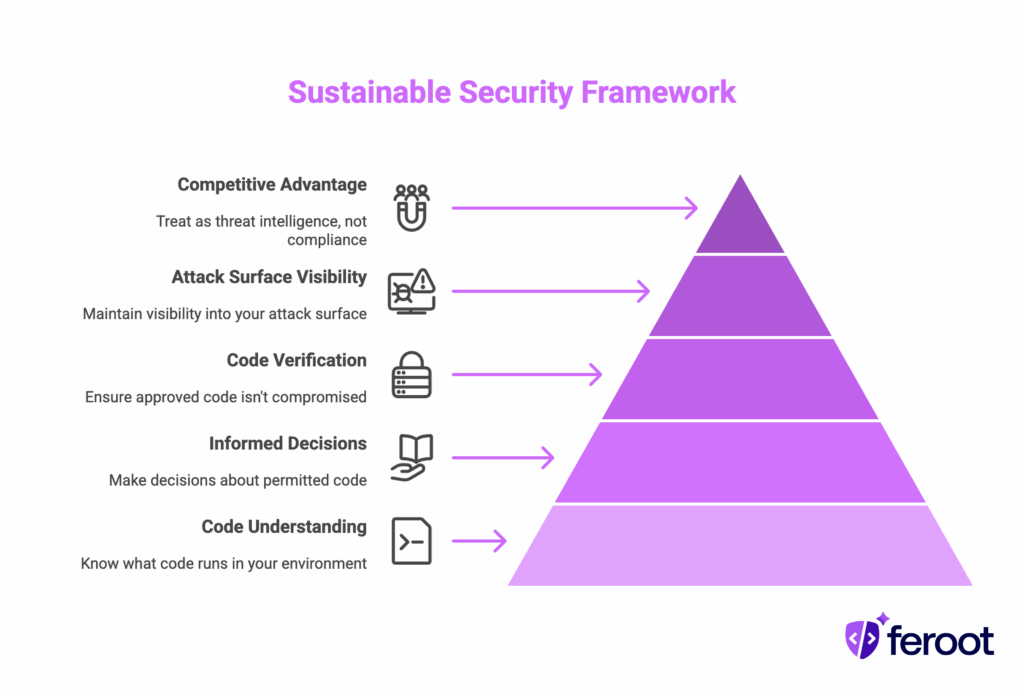

The threat landscape will continue evolving, but the fundamental principles remain sound: understand what code runs in your environment, make informed decisions about what should be permitted, verify that approved code hasn’t been compromised, and maintain visibility into your actual attack surface.

Success ultimately depends on treating 6.4.3 as an operational security practice rather than a compliance checkbox. Organizations that implement it most effectively recognize its value for protecting customer data and business operations, not just for satisfying auditor requirements. Approached this way, compliance becomes a source of competitive advantage rather than a regulatory burden. Your competitors are still treating 6.4.3 as a checkbox. You’ll be treating it as threat intelligence.